Arm Unveils Cortex-X4 And Immortalis GPU For Big Mobile Performance And Efficiency Gains

Arm's Total Compute Solution For 2023

Arm is announcing its latest CPU and GPU designs, which will power future generations of smartphones, tablets, IoT devices, and even some laptops. Arm licenses these designs to chipmakers like Qualcomm, MediaTek, Samsung, and countless others for integration into their own solutions. We were invited to the firm’s Client Tech Days, where we learned the architectural details, design philosophies, and intended applications for these upcoming products.

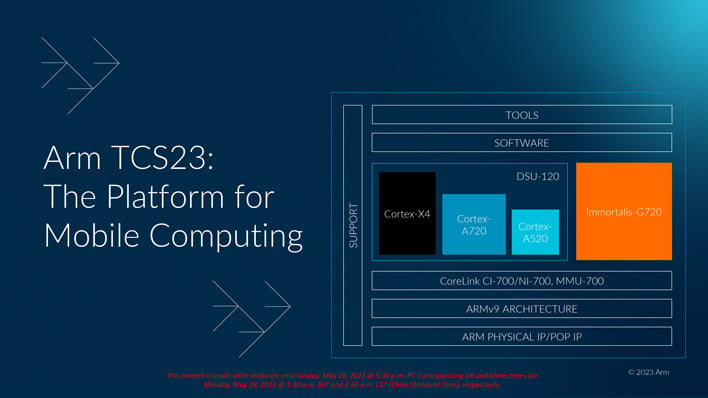

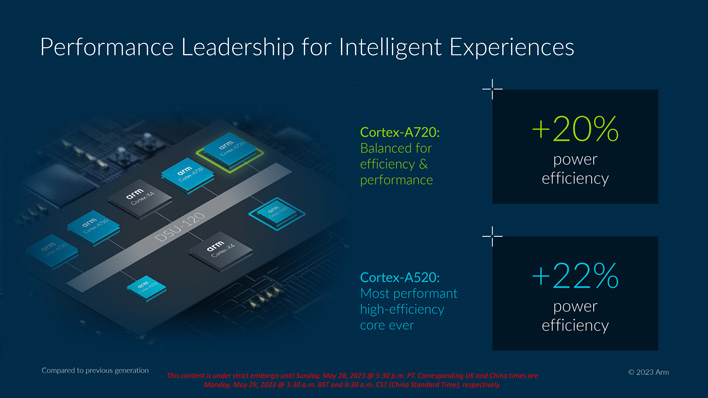

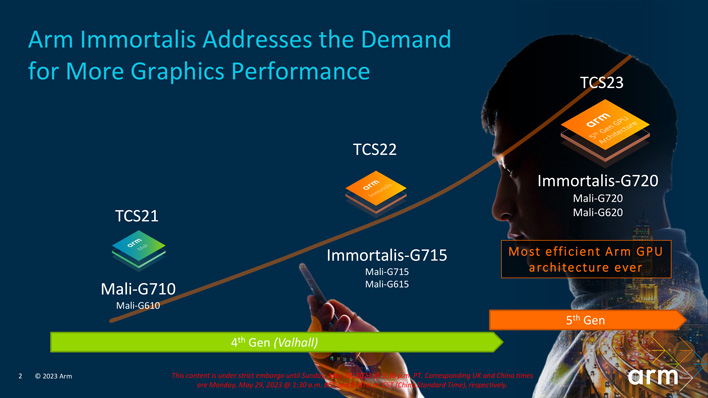

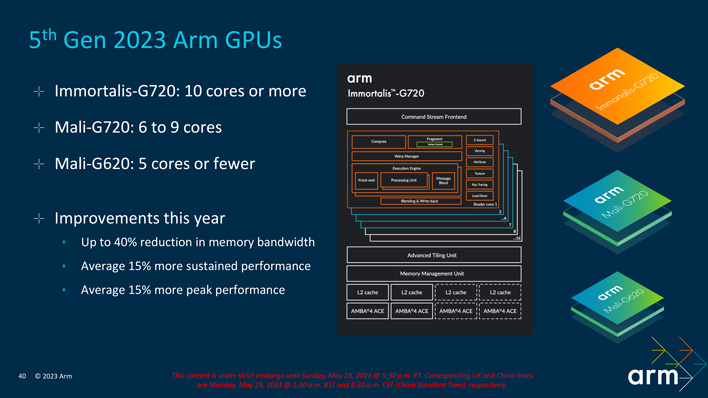

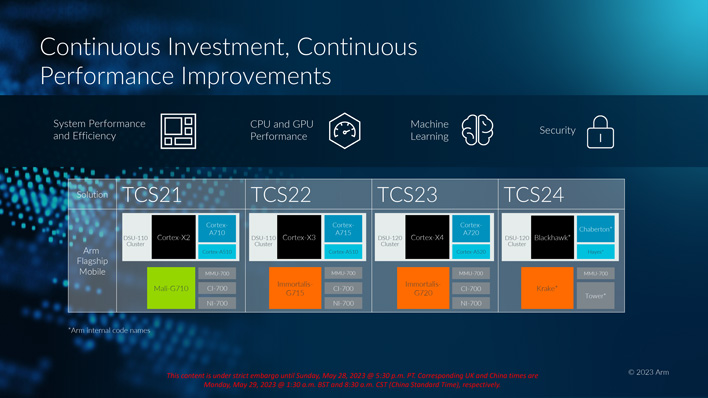

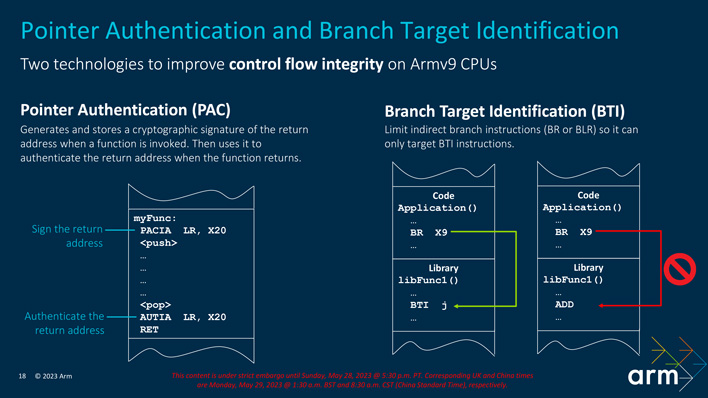

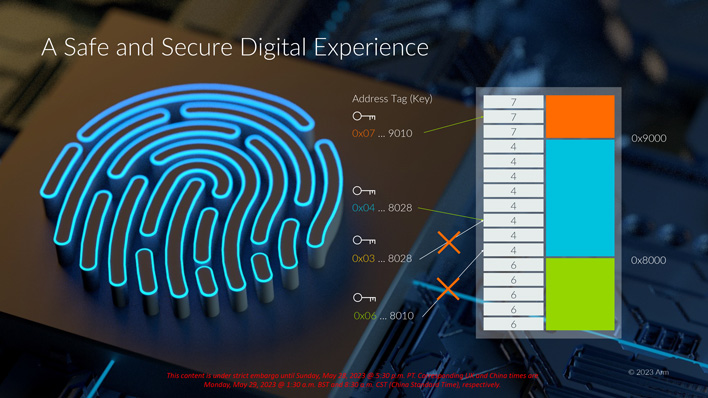

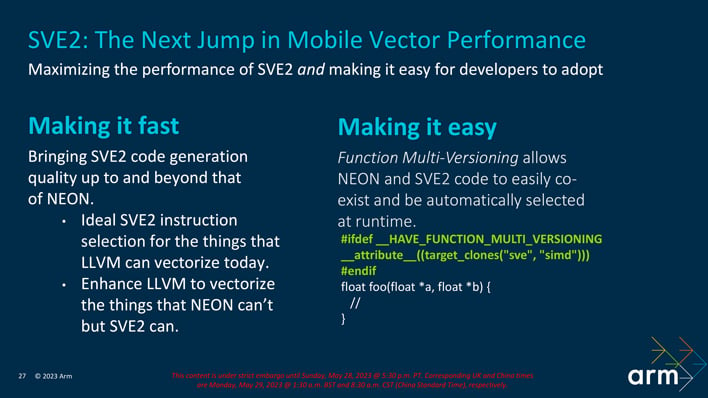

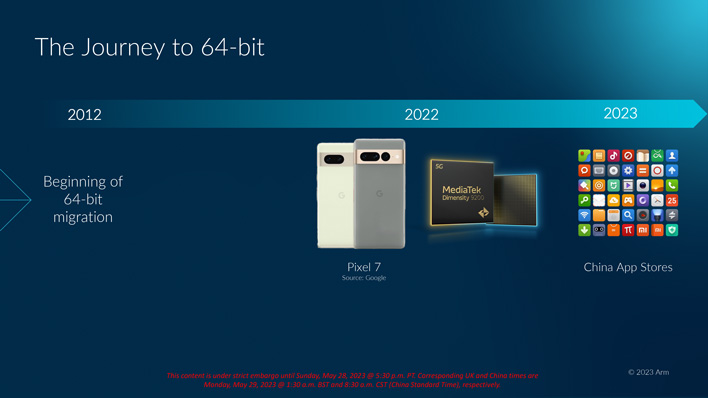

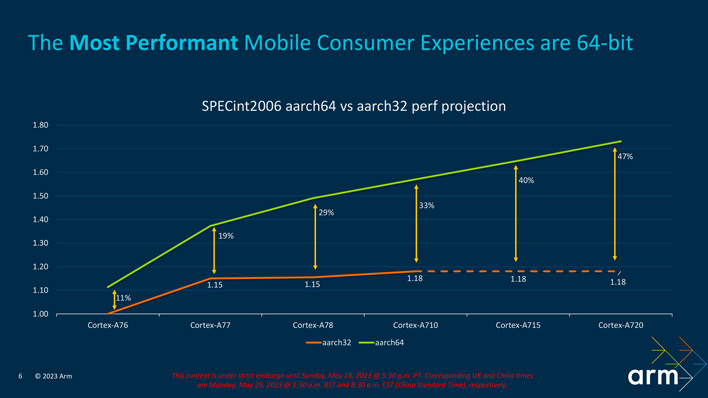

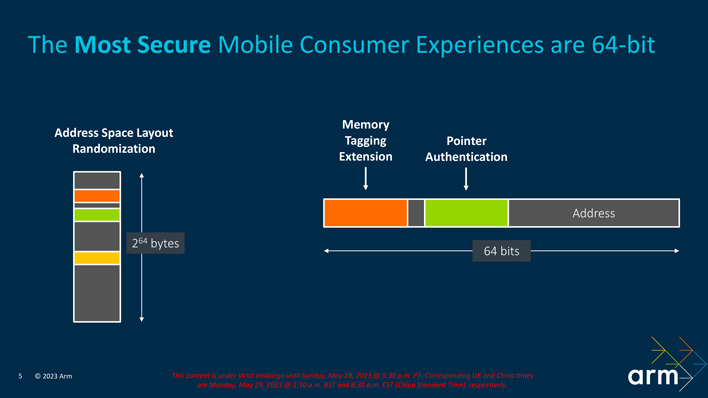

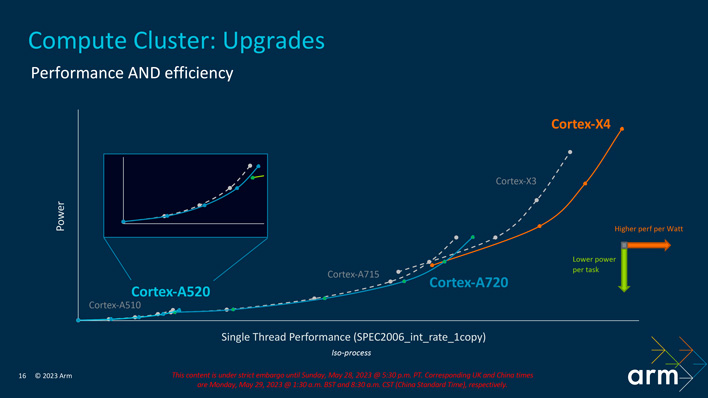

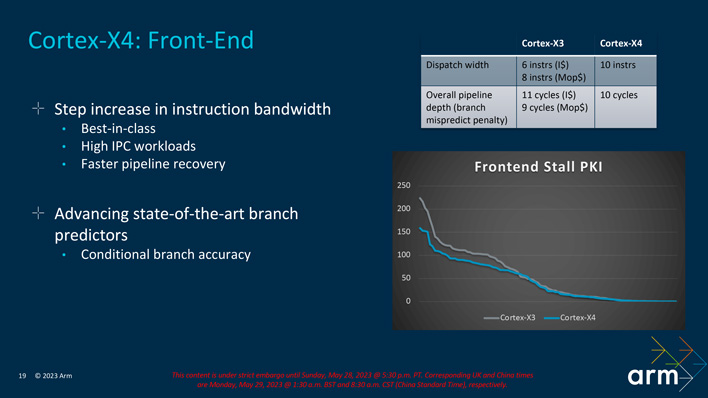

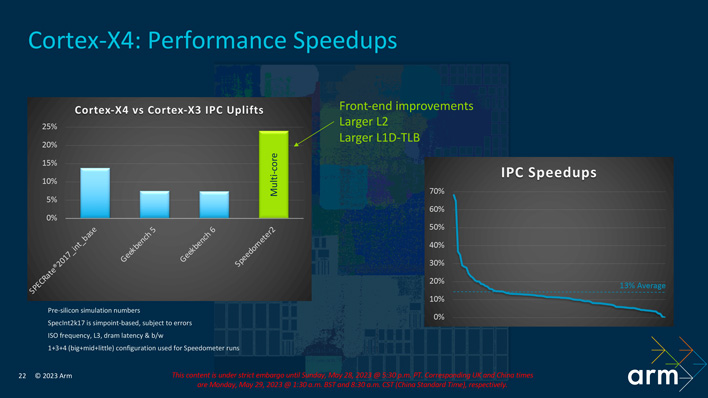

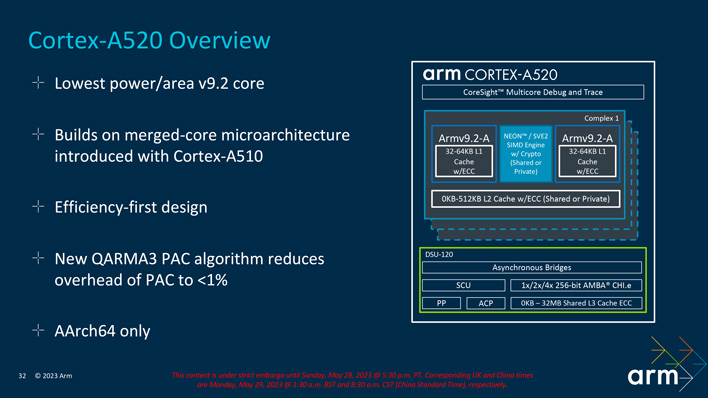

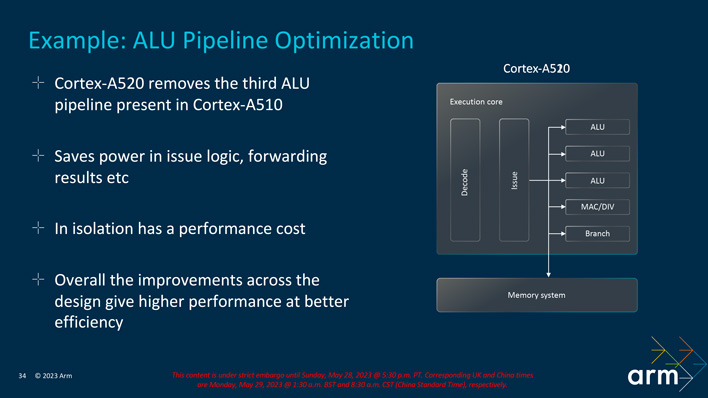

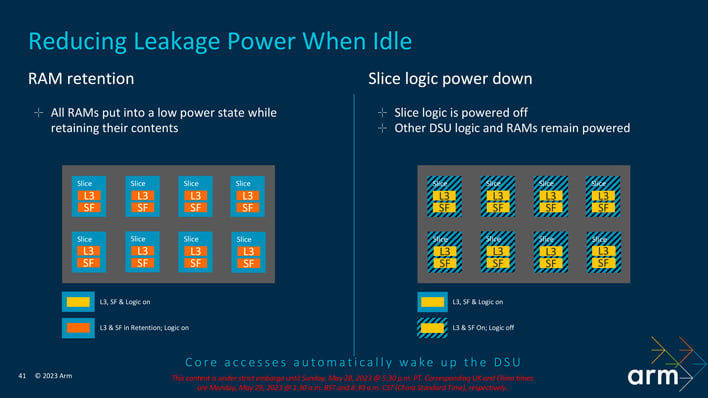

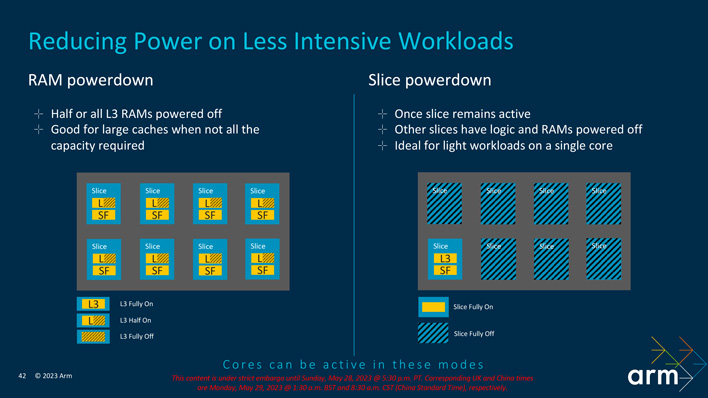

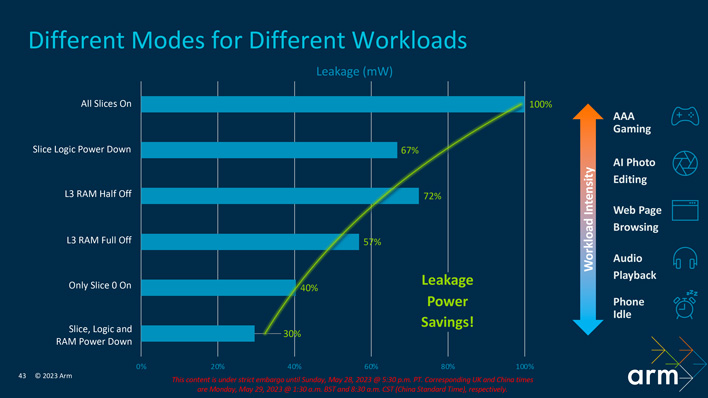

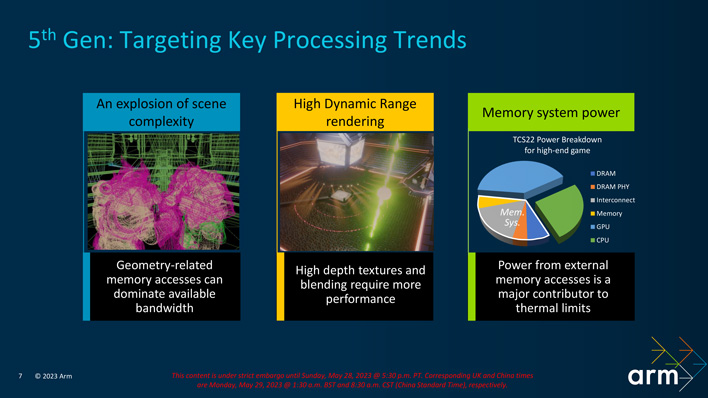

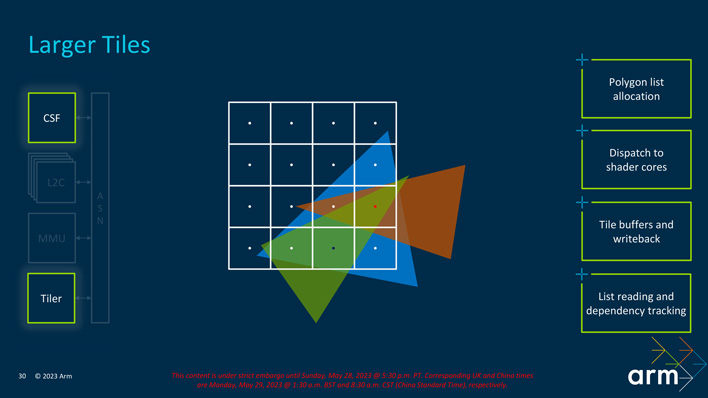

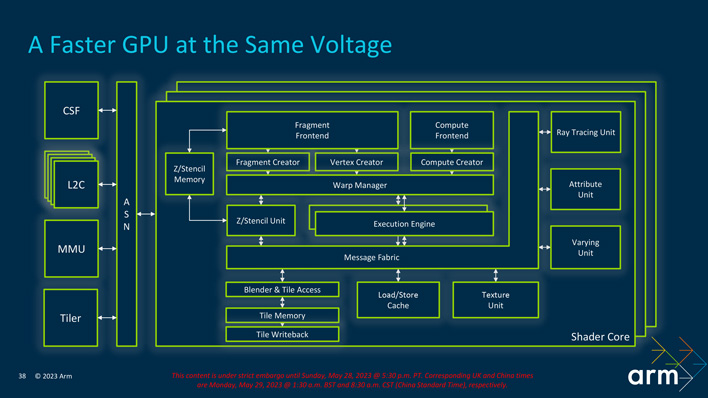

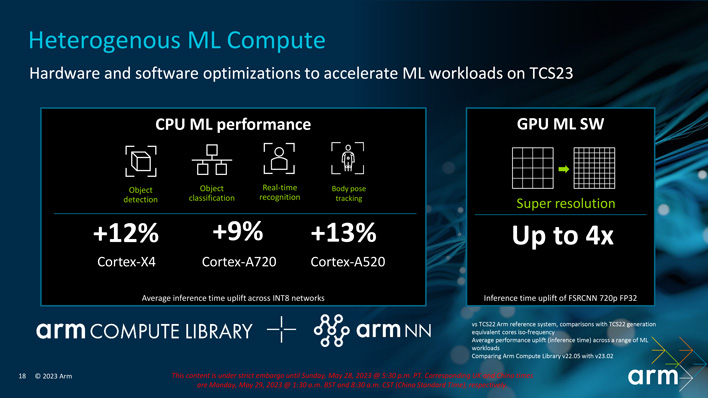

Arm framed its presentations around the surging demand for performant mobile solutions and a growing diversity of form factors and workloads. This culminates in more developers than ever targeting Arm devices, spanning beyond mobile into other spaces like data centers and automotive workloads.

While Arm does not produce chips itself, it develops a reference Total Compute Solution (TCS) platform to provide its customers a starting point for their own implementations. TCS23 spans three levels of CPU cores: the Cortex-X4, Cortex-A720, and Cortex-A520. Each of these core designs is tailored to a different slice of workloads, and they can work in concert for the full system solution. Each is built on the Armv9.2 architecture, which affords several performance optimizations and security enhancements. The TCS23 platform also offers GPU designs which include the new flagship Immortalis-G720, Mali-G720, and Mali-G620 options.

As per usual, Arm is claiming improvements to performance and efficiency with these latest designs. The details about these gains vary by solution and implementation, which we will get into later, but at a high level, the new CPU cores can deliver upwards of 20% energy savings at equivalent performance to the prior generation, or more performance where power budgets can remain constant. Likewise, the Immortalis-G720 flagship GPU solution can offer 15% more performance while reducing memory bandwidth usage by 40% through a change in its rendering pipeline.

Arm is working more closely than ever with chip fabs like TSMC to optimize its designs for leading process nodes. A better understanding of process tech intricacies and early development feedback helps Arm's customers get their products to market more quickly. As part of this, Arm achieved the industry-first tape-out of a Cortex-X4 core using TSMC's N3E process.

"Our latest collaboration with Arm is an excellent showcase of how we can enable our customers to reach new levels of performance and efficiency with TSMC’s most advanced process technology and the powerful Armv9 architecture. We will continue to work closely with our Open Innovation Platform (OIP) ecosystem partners like Arm to push the envelope on CPU innovations to accelerate AI, 5G, and HPC technology advances," said Dan Kochpatcharin, Head of the Design Infrastructure Management Division at TSMC.

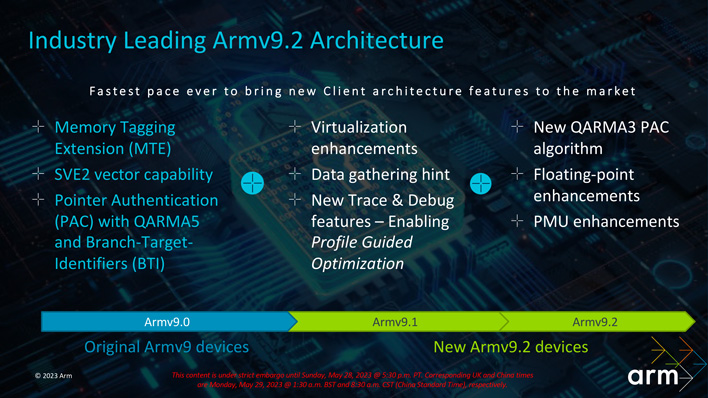

Armv9.2 Enhancements

Armv9.2’s standout additions include Pointer Authentication (PAC) and Branch Target Identification (BTI), along with improvements to the Memory Tagging Extension (MTE) in hardware, to significantly bolster security.

PAC and BTI both help reduce the footprint of exploitable code. Even if an attacker manages to escape a sandbox, PAC cryptographically signs the return address when a function is invoked, so that something like a link register overwrite cannot make the function return to the wrong place. Similarly, BTI restricts entry points for functions, preventing an attacker from arbitrarily executing a selected portion of code as part of an exploit.
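The return-address signing idea behind PAC can be sketched in a few lines of Python. This is only a toy model under stated assumptions: HMAC-SHA256 stands in for the hardware cipher, the 16-bit signature placed above a 48-bit address is a simplified layout, and the names `pac_sign`, `pac_auth`, and `PacAuthError` are invented for illustration.

```python
import hashlib
import hmac

VA_BITS = 48    # assumed virtual address width for this toy layout
PAC_BITS = 16   # assumed signature width stored in the unused upper bits
ADDR_MASK = (1 << VA_BITS) - 1


class PacAuthError(Exception):
    """Raised when a signed pointer fails authentication."""


def pac_sign(addr, context, key):
    """Return addr with a signature over (addr, context) in its upper bits."""
    msg = addr.to_bytes(8, "little") + context.to_bytes(8, "little")
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    pac = int.from_bytes(digest[:PAC_BITS // 8], "little")
    return (pac << VA_BITS) | addr


def pac_auth(signed, context, key):
    """Check the signature and return the plain address, or raise."""
    addr = signed & ADDR_MASK
    if pac_sign(addr, context, key) != signed:
        raise PacAuthError("return address was modified")
    return addr


key = b"per-process secret key"   # hypothetical key, hidden from user code
stack_pointer = 0x7FFF_F000       # used as the signing context
return_addr = 0x0000_5555_1234

signed = pac_sign(return_addr, stack_pointer, key)   # on function entry
restored = pac_auth(signed, stack_pointer, key)      # on function return
```

An attacker who overwrites the saved return address without knowing the key would have to guess the signature bits as well, so in this model a forged pointer fails `pac_auth` with overwhelming probability instead of silently redirecting control flow.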

MTE helps to prevent things like a sandbox breakout to begin with, by generating a tag when memory is allocated and then checking it with each load/store operation. Memory security issues have been among the fastest growing threat vectors. Arm cited an unnamed community app which claims MTE allows it to detect 90% of memory security issues prior to release.
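To make the tag-and-check mechanism concrete, here is a minimal Python simulation. It assumes the commonly documented MTE parameters of 4-bit tags and 16-byte granules. The bump allocator, the `TaggedHeap` class, and the `(tag, address)` pointer tuples are illustrative inventions, not how a real allocator or the hardware is implemented.

```python
import random

TAG_BITS = 4    # MTE tags are 4 bits wide
GRANULE = 16    # one tag covers a 16-byte granule


class TagCheckFault(Exception):
    """Raised when a pointer's tag does not match the memory's tag."""


class TaggedHeap:
    def __init__(self, size, seed=0):
        self.data = bytearray(size)
        self.tags = [0] * (size // GRANULE)
        self.rng = random.Random(seed)
        self.next_free = 0  # simple bump allocator, never reuses space

    def _fresh_tag(self, exclude):
        choices = [t for t in range(1, 1 << TAG_BITS) if t not in exclude]
        return self.rng.choice(choices)

    def _retag(self, addr, nbytes, tag):
        first = addr // GRANULE
        count = -(-nbytes // GRANULE)  # round up to whole granules
        for g in range(first, first + count):
            self.tags[g] = tag

    def malloc(self, nbytes):
        addr = self.next_free
        size = -(-nbytes // GRANULE) * GRANULE
        if addr + size > len(self.data):
            raise MemoryError("toy heap exhausted")
        # Avoid reusing the left neighbour's tag so overflows are caught.
        left = self.tags[addr // GRANULE - 1] if addr else 0
        tag = self._fresh_tag({left})
        self._retag(addr, nbytes, tag)
        self.next_free = addr + size
        return (tag, addr)  # the "pointer" carries its tag

    def free(self, ptr, nbytes):
        tag, addr = ptr
        # Retag on free so stale pointers no longer match.
        self._retag(addr, nbytes, self._fresh_tag({tag}))

    def _check(self, ptr, offset):
        tag, addr = ptr
        target = addr + offset
        if self.tags[target // GRANULE] != tag:
            raise TagCheckFault(hex(target))
        return target

    def load(self, ptr, offset=0):
        return self.data[self._check(ptr, offset)]

    def store(self, ptr, offset, value):
        self.data[self._check(ptr, offset)] = value
```

In this model, reading one granule past the end of an allocation lands on memory carrying a different tag and faults, and so does any access through a pointer after `free`. Those are the overflow and use-after-free classes of bug that the checks are meant to surface.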

Armv9.2 also adds support for the Scalable Vector Extension version 2 (SVE2). SVE2 is a single instruction multiple data (SIMD) instruction set extension for AArch64, which is a superset of SVE and Neon instructions. This is useful for highly parallel workloads like image processing. Arm has focused on making SVE2 code generation as fast as, or faster than, Neon to incentivize adoption. In addition, developers can mark up code where they want both Neon and SVE2 versions generated, which can then be selected at runtime. This allows support for older architectures via Neon while next-generation devices can benefit from SVE2, all without significant overhead or rewriting code bases.
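The runtime selection step can be illustrated with a small Python sketch. It assumes a Linux-style `/proc/cpuinfo` text whose `Features` line lists flags such as `asimd` (Neon) and `sve2`. The kernel table and function names are hypothetical, and a plain Python dot product stands in for what would really be compiler-generated Neon and SVE2 code.

```python
def parse_features(cpuinfo_text):
    """Return the set of CPU feature flags from cpuinfo-style text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "Features":
            return set(value.split())
    return set()


def dot_scalar(a, b):
    """Portable fallback; also the stand-in body for the SIMD variants."""
    return sum(x * y for x, y in zip(a, b))


# Ordered by preference: newest vector extension first.
# Both entries reuse dot_scalar here purely as placeholders.
KERNELS = [
    ("sve2", dot_scalar),
    ("asimd", dot_scalar),
]


def select_kernel(features):
    """Pick the best available implementation once, at startup."""
    for flag, fn in KERNELS:
        if flag in features:
            return flag, fn
    return "scalar", dot_scalar


sample = "processor : 0\nFeatures : fp asimd sve sve2\n"
name, kernel = select_kernel(parse_features(sample))
result = kernel([1, 2], [3, 4])
```

The point of the pattern is that the check happens once and every later call goes through the chosen function, so older devices fall back to the Neon path without a separate build.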

In one example Arm gave, an indirect time-of-flight capture of a 3D scene, SVE2 outperformed Neon by 10% at FP32 and 23% at FP16. Most of this is attributed to the efficiency of SVE2's gather-scatter addressing instructions versus Neon.

TCS23 finally drops support for AArch32 across the board, which was still supported in the prior-gen Cortex-A710 Armv9 core. The transition to 64-bit has been a decade-long effort and required coordination with a wide variety of players, from Google and its hardware partners to various app store operators and application developers.

A 64-bit exclusive solution enhances security through its much larger address space, where techniques like address space randomization reduce the odds of a bad actor snooping on running workloads. 64-bit addresses also have spare space that can be used for signed pointers, MTE, and other uses.

Arm employees remarked that die area savings were not a significant consideration. Gutting 32-bit features only provides “single digit percentage” reductions. Nevertheless, it significantly reduces complexity, testing, and other requirements in addition to the aforementioned security advantages. These less tangible savings can be reinvested into performance and efficiency gains throughout the system to scale beyond where 32-bit architecture has otherwise plateaued.

Arm CPU Cores And Compute Cluster

Arm has been leveraging a three-tier CPU solution for a few generations now, replacing its big.LITTLE arrangement with the DynamIQ cluster. The X-series and A700-series cores feature out-of-order processing to work ahead when the chip would otherwise stall waiting for memory. Tasks scheduled to the A500-series cores are not typically as time-sensitive, and so employ in-order processing to make every operation count.

The efficiency curves of these designs complement each other well to provide suitable coverage for various operating points. The high-end X-series cores perform the heaviest lifting, the middle A700-series cores focus on sustained performance, and the little A500-series cores put efficiency above all else for background tasks.

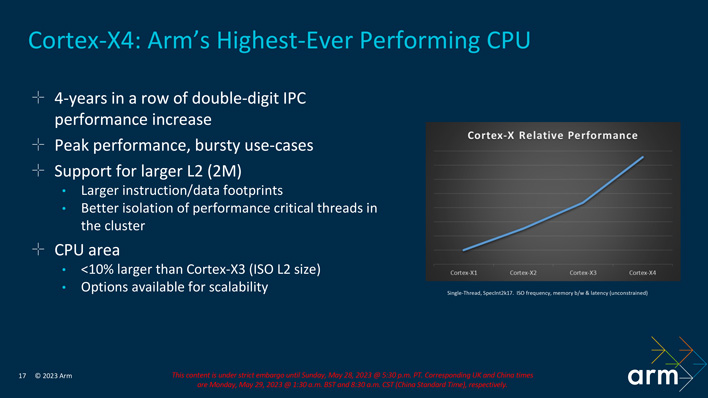

Cortex-X4 Heavy Lifting

This generation, the Cortex-X4 delivers the fourth consecutive year of double-digit IPC gains. The Cortex-X4 yields 15% better single-threaded performance, yet is also the most efficient X-series core Arm has designed to date. A key improvement is the doubling of L2 cache scalability up to 2MB per core. The additional cache reduces system memory calls and keeps the engine better fed.

The Cortex-X4 is further fed by a redesigned instruction fetch delivery system. It is now a 10-wide core, delivering best-in-class bandwidth for high IPC workloads. The branch prediction itself has been further refined over the Cortex-X3, which particularly reduces stalls in real-world workloads. The graph below depicts less-predictable workloads on the left and moves towards more synthetic workloads on the right.

The number of ALUs has also grown from 6 to 8. The MCQ reorder buffer has been expanded from 320x2 to 384x2, which allows more out-of-order instructions to be tracked, and it now treats a load-store flush like a branch mispredict for faster handling.

Arm is claiming an average IPC speedup of 13% across a range of workloads, but it is again real-world scenarios that will see the greatest advantages. Synthetic benchmarks benefit less from the front-end and cache changes and as such see less improvement.

Cortex-A720 Sustained Performance

The Cortex-A720 is 20% more power efficient than the prior-generation Cortex-A715, and it features shorter and more efficient pipelines. At the front-end, it has removed one cycle from the branch mispredict pipeline, allowing swifter recovery in less-predictable real-world workloads.

Alternatively, Arm offers an area-optimized configuration of the Cortex-A720. At a die size matching the Cortex-A78 Armv8 core, the area-optimized Cortex-A720 can provide 10% more performance and the newer Armv9.2 features.

Cortex-A520 Efficiency

The Cortex-A520 also strives to improve performance, but only while increasing efficiency. The LITTLE core has more importance in cost-constrained devices and is the chief driver of battery life during idle or low intensity usage.

It retains the merged-core architecture used by the Cortex-A510 which places two cores in a complex with a single pool of shared or private L2 cache (up to 512KB) and SIMD engine (SVE2/Neon). It incorporates the QARMA3 PAC algorithm to reduce overhead to less than 1%, allowing it to capitalize on the latest security features without a performance penalty.

Interestingly, the Cortex-A520 reduces its ALU count to 2, down from 3 in the prior-gen Cortex-A510. Alone, this would incur a performance penalty, but the savings in power and area allowed its engineers to push performance in other ways.

This culminates in 8% higher performance with a very slight reduction in power. Keeping performance constant, the Cortex-A520 can operate with 22% less power than the Cortex-A510.

DSU-120 Compute Cluster

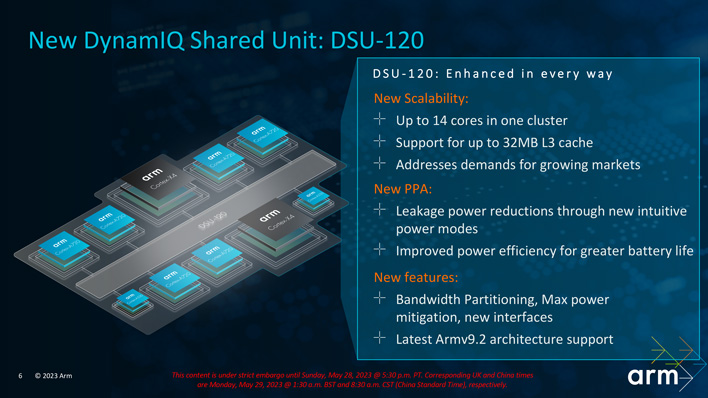

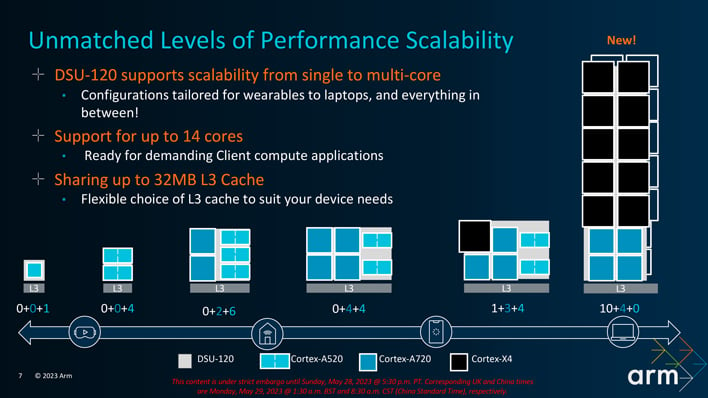

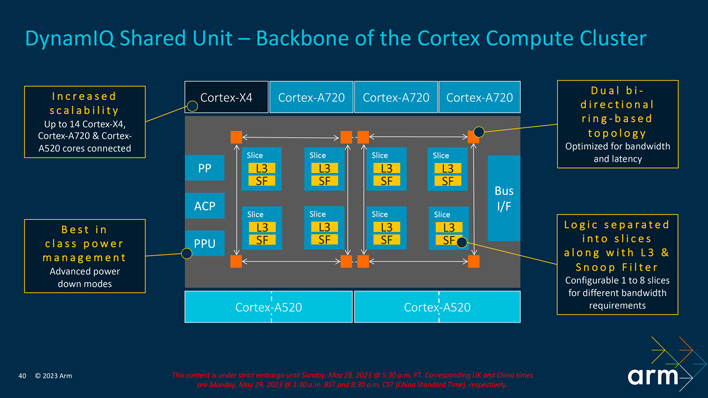

The cores are joined together in the DSU-120 (DynamIQ Shared Unit) cluster, which Arm says offers better scalability for even more cores and efficiency than prior revisions. Each DSU-120 cluster can house up to 14 cores in whatever configuration the customer’s design goals dictate. Each core in the cluster has its own private L2 cache, with a shared cluster-wide (up to 32MB) L3 pool.

While a single DSU-120 cluster is more than sufficient for most mobile designs, customers do have the option to link multiple clusters together for even higher core counts, all linked across the high-bandwidth CoreLink coherent interconnect. There’s no practical upper limit to the number of clusters that can be chained together, but chips can only be made so large. At any rate, we don’t expect this to be a popular option for Arm-based solutions.

The DSU-120’s logic, L3 cache, and snoop filter are divided into slices (up to 8), linked with a dual bidirectional ring-based topology. This reduces latency by generally reducing hops and allows for higher bandwidth.

The DSU-120 further helps the system’s efficiency with a variety of power-saving modes. RAM retention places the L3 cache and snoop filter into a low power state that is quick to wake while the logic portion remains active. Alternatively, slice logic power down powers off the logic of each slice while the L3 cache and snoop filter remain active. These two modes are controlled independently, but they can also be combined.

In addition, RAM powerdown can power off half or all of each L3 cache pool, but doing so dumps the contents of the powered off regions. This saves even more power when the total cache capacity is not needed. Slice powerdown fully shuts off slices (logic, L3 cache, and snoop filter) down to a single active slice.

In total, these power modes can reduce the DSU’s power draw by two-thirds during idle or low-intensity workloads.

Arm’s 5th Gen Graphics Architecture

The TCS23 is not limited to the CPU complex, but also incorporates the Immortalis-G720, Mali-G720, and Mali-G620 GPU options. Arm has dropped the Nordic names for its GPU architecture generations (e.g. Valhall), instead opting to simply refer to it as 5th Gen.

Arm’s design goal is to allow more immersive games and real-time 3D apps, and to allow these experiences to run for longer without throttling or having to run to a power outlet. In particular, developers are creating scenes with greater geometric complexity and employing more high-dynamic-range rendering, while memory system power is becoming a major contributor to thermal limits.

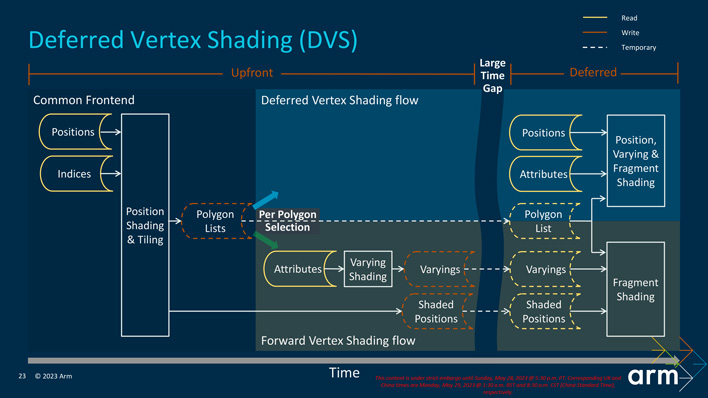

This last point is the largest target of Arm’s attention. The 5th Gen GPU architecture reduces memory bandwidth usage by up to 40%. This is primarily accomplished through larger buffers and the implementation of Deferred Vertex Shading (DVS) in the rendering pipeline.

At a high level, a visible scene of triangles is sorted into regions called tiles for processing. Some triangles can span tiles, which complicates matters and requires traditional upfront vertex shading pipelines which have to cache a large amount of data. Triangles that are wholly contained in a tile, however, can get away with performing minimal work up front to flatten the perspective, but can discard the data and only start vertex shading in the deferred phase of the pipeline.

DVS brings vertex and fragment shading together, reduces mis-caches, and only writes back to memory once which saves a lot of memory bandwidth.

Arm’s 5th Gen GPU architecture uses larger tile sizes (64x64 vs 32x32) which means there are more opportunities for the Tiler to select DVS. In addition, increased scene complexity makes for smaller triangles and therefore even more opportunities to defer the vertex shading and save memory bandwidth.
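A rough way to see why larger tiles create more DVS candidates is to count how many triangles fit entirely within one tile at each tile size. The Python sketch below treats a triangle as single-tile when its pixel bounding box falls within one tile. The four bounding boxes are made-up sample data, and the actual selection logic in Arm's Tiler is not described in this detail, so this is an illustration of the geometry only.

```python
def tiles_touched(bbox, tile):
    """Number of tiles overlapped by an inclusive pixel bounding box."""
    x0, y0, x1, y1 = bbox
    cols = x1 // tile - x0 // tile + 1
    rows = y1 // tile - y0 // tile + 1
    return cols * rows


def single_tile_fraction(bboxes, tile):
    """Share of triangles wholly contained in one tile (DVS candidates)."""
    inside = sum(1 for b in bboxes if tiles_touched(b, tile) == 1)
    return inside / len(bboxes)


# Hypothetical triangle bounding boxes as (x0, y0, x1, y1) in pixels.
triangles = [
    (0, 0, 10, 10),
    (30, 30, 40, 40),
    (60, 60, 70, 70),
    (100, 100, 120, 120),
]

frac_32 = single_tile_fraction(triangles, 32)  # 0.5
frac_64 = single_tile_fraction(triangles, 64)  # 0.75
```

With this sample, the triangle around pixel 30 to 40 straddles a boundary on the 32-pixel grid but sits inside a single 64-pixel tile, which is the effect the larger tile size relies on. Shrinking the triangles pushes the fraction up further in the same way.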

The new GPU architecture carries other improvements throughout the engine as well. It can perform variable rate shading at higher rates, performs faster work dispatch with extra working registers, and supports more fixed-function throughput for graphics. The ray tracing unit (RTU) is also on a power island now, which means less power leakage for most applications that do not engage the RTUs at all.

The three GPU models are configurable, but largely defined by core counts. The Immortalis-G720 has 10 or more cores, the Mali-G720 includes 6 to 9 cores, and the Mali-G620 is for designs with 5 or fewer cores. In addition to the 40% memory bandwidth reduction, these designs average 15% more sustained and peak performance over the prior generation.

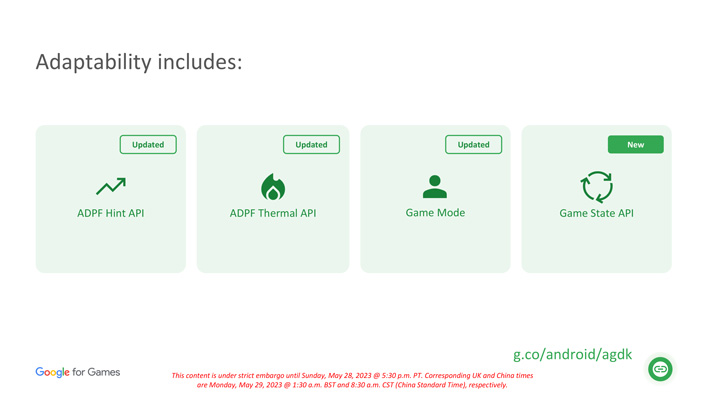

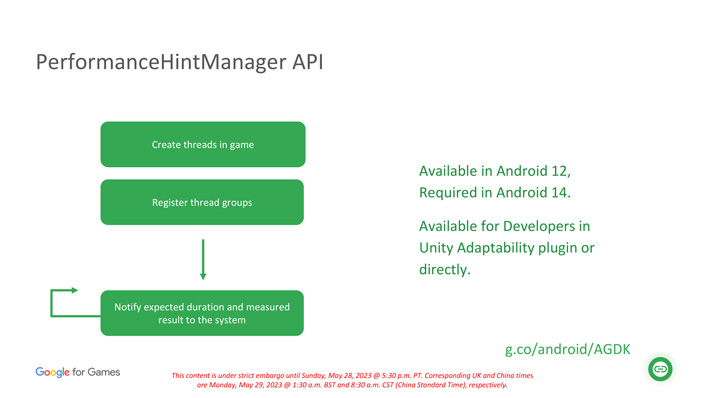

Android Dynamic Performance Framework

Finally, Arm brought in Scott Carbon-Ogden, Senior Project Manager of Android Games at Google, to discuss its integration with Google’s Android Dynamic Performance Framework (ADPF), which Google says allows applications to understand and respond to changing performance, thermal, and user situations in real time. ADPF spans the ADPF Hint API, ADPF Thermal API, Game Mode, and Game State API, but the presentation focused on the first two.

The ADPF Hint API is designed to go beyond the default Linux scheduler to both avoid a delay in ramping up performance and avoid wasting power once a workload has ended.

The API allows an application to better inform the operating system about a workload’s target and actual CPU duration so it can schedule more efficiently. The end result for the user can be a dramatic reduction in frame drops and some level of power savings.
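The target-versus-actual feedback loop can be modelled with a short Python sketch. This is not the Android API: the `HintSession` class, its discrete performance levels, and the 80% slack threshold are all assumptions chosen to show how reporting work durations could let a scheduler ramp up quickly and back off when there is headroom.

```python
class HintSession:
    """Toy model of a scheduler reacting to per-frame duration reports."""

    def __init__(self, target_ms, levels=5, start_level=2, slack=0.8):
        self.target_ms = target_ms
        self.max_level = levels - 1
        self.level = start_level
        self.slack = slack  # below slack * target we assume spare headroom

    def report_actual(self, actual_ms):
        """Adjust the performance level from one reported work duration."""
        if actual_ms > self.target_ms:
            # Missed the deadline: step up immediately.
            self.level = min(self.level + 1, self.max_level)
        elif actual_ms < self.slack * self.target_ms:
            # Finished well early: step down to save power.
            self.level = max(self.level - 1, 0)
        return self.level


session = HintSession(target_ms=16.6)          # roughly a 60 FPS frame budget
history = [session.report_actual(ms) for ms in (20, 20, 20, 10, 15)]
```

In this run the level climbs while frames overrun the 16.6 ms budget, saturates at the top level, drops one step after a 10 ms frame, and holds for a 15 ms frame that is inside the budget but above the slack threshold.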

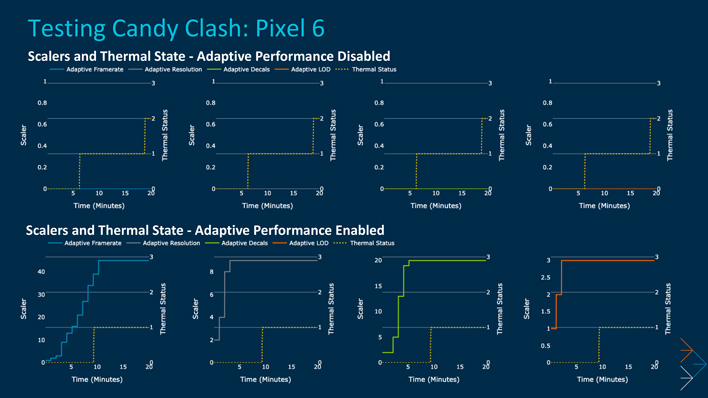

The ADPF Thermal API affords more ways to scale performance when thermal constraints are met than simply reducing framerate. Instead, the application can respond with other options like adaptive resolution, adaptive decals, or adaptive LOD (Level of Detail) to tune the user experience in less jarring ways.

In the example given, Candy Clash running on the Pixel 6, the ADPF Thermal API resulted in 25% higher average FPS, which is good, but the consistency of framerate shown is far more important. In addition, it resulted in up to an 18% reduction in CPU power, which can either be redistributed to the GPU or preserved for longer gaming sessions.

Arm Total Compute Solution For 2023: Final Thoughts

The Arm TCS23 framework represents a solid foundation for its customers to develop next-generation SoCs with improved power, performance, and efficiency, but the company is also already looking to the road ahead.

Arm shared this slide of its future roadmap. It depicts TCS24 with the Cortex-X4 successor's codename as Blackhawk, supported by the Chaberton and Hayes cores at the A7XX and A5XX levels, respectively. We also see Krake listed as the codename for its next-generation GPU. Arm's press release reads, "We’ve never been more committed to our CPU and GPU roadmap and over the next few years we’ll invest heavily in key IP, such as the Krake GPU and the Blackhawk CPU to deliver the compute and graphics performance our partners demand." We certainly look forward to seeing how Arm's IP continues to evolve, particularly as AI and machine learning are proving to be more and more disruptive.

AI comprised a surprisingly small portion of Arm's presentations this year. The company has not announced a follow-up to its NPU, instead electing to allow customers to differentiate with their own solutions. The generative and LLM AI surge in particular has resulted in shifts that make dedicated hardware solutions less attractive for now. Many of these workloads are being handled by traditional CPUs and GPUs at the edge currently, at least until the industry can recoalesce on new standards. Larger workloads, meanwhile, are being pushed to datacenters where the economies of scale are currently allowing greater efficiency.

Arm's partners are very excited for the potential of these next-generation designs, particularly for gaming and to enable new use-cases. Dave Burke, VP of Engineering at Android, said, "Together with the developer community, Android is committed to bringing the power of computing to as many people as possible. We're excited to see how Arm's new hardware advancements are adopted by vendors, with security and performance improvements that can benefit the Android ecosystem as a whole."

"Arm’s innovative 2023 IP, the Cortex-X4 and Cortex-A720, and Immortalis G720 have provided an excellent foundation for our next-generation Dimensity flagship 5G smartphone chip, which will deliver impressive performance and efficiency through groundbreaking chip architecture and technical innovations. Using Arm's industry-leading technologies, MediaTek Dimensity will enable users to do more at once than ever before and unlock incredible new experiences, longer gaming sessions, and excellent battery life," added Dr. JC Hsu, Corporate Senior Vice President and General Manager of Wireless Communications Business Unit at MediaTek.

We look forward to seeing the products this new IP can develop into, and we should begin seeing new solutions leveraging it enter the market sometime next year.