AMD Radeon RX 6500 XT Only Supports PCIe 4.0 x4, But Does It Matter?

Did you hear about the Radeon RX 6500 XT? AMD announced the little video card on Tuesday at its pre-CES show. The company didn't reveal many specifications about the new card, but thanks to earlier leaks as well as some clever deduction, we already know most of the relevant details.

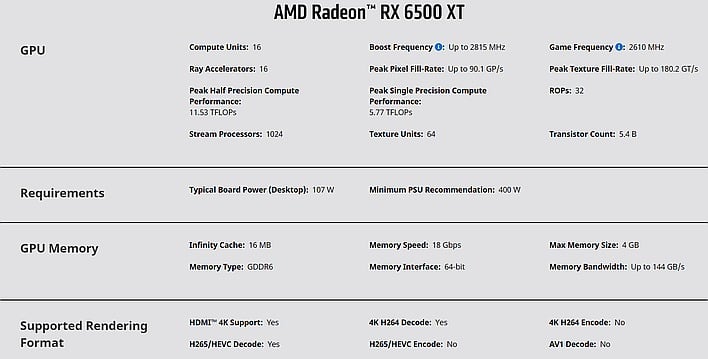

The card is based on the tiny Navi 24 GPU, codenamed Beige Goby. That chip comes with eight RDNA 2 WGPs (equivalent to 16 old-school CUs, giving it 1,024 stream processors), a boost clock of up to 2.6GHz, and 4GB of GDDR6 memory connected over a slim 64-bit bus. Those specs make it a very small GPU, but specification pages for products based on the fully enabled Beige Goby indicate that cards will still require an 8-pin PCIe power connector.
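The stream processor count follows directly from how RDNA 2 is organized: each WGP contains two CUs, and each CU contains 64 stream processors. A minimal Python sketch of that arithmetic (the function and constant names are illustrative only, not taken from any AMD tool):

```python
# RDNA 2 groups two Compute Units (CUs) into each Workgroup Processor (WGP),
# and each CU contains 64 stream processors (two 32-wide SIMDs).
CUS_PER_WGP = 2
STREAM_PROCESSORS_PER_CU = 64


def rdna2_shader_counts(wgps: int) -> tuple[int, int]:
    """Return (compute_units, stream_processors) for a given number of WGPs."""
    cus = wgps * CUS_PER_WGP
    return cus, cus * STREAM_PROCESSORS_PER_CU


# Navi 24 / Beige Goby: eight WGPs.
cus, sps = rdna2_shader_counts(8)
print(f"{cus} CUs, {sps} stream processors")  # → 16 CUs, 1024 stream processors
```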

Those same product pages also reveal another curious detail about the Radeon RX 6500 XT: it will only use four lanes of PCI Express connectivity to the host system. That's right; the host-to-GPU interface for the Radeon RX 6500 XT is PCIe 4.0 x4. That interface offers roughly 8GB/s of bandwidth in each direction between CPU and GPU, as long as the host system supports PCIe 4.0. On an older PCIe 3.0 platform, the card falls back to PCIe 3.0 x4, or roughly half that.

Therein lies the detail that has some folks concerned. Sure, PCIe 4.0 x4 is broadly equivalent to PCIe 3.0 x8 or PCIe 2.0 x16, and the entry-level Radeon RX 6500 XT is about as fast as the top GPUs we were using back when PCIe 2.0 x16 was the standard. However, the card physically has only four lanes connected to the GPU; you can clearly see this in pictures of the card on the product page for the ASRock Radeon RX 6500 XT Phantom Gaming. It's likely that the minuscule GPU chip itself has only a four-lane interface.

The thing is, gaming workloads, for the most part, aren't very sensitive to host bus throughput. Because this card is meant for entry-level gaming, even PCIe 3.0 x4 is almost assuredly still plenty of bandwidth, so any performance drop on PCIe 3.0 systems is unlikely to be significant. TechPowerUp's most recent PCI Express scaling article tested a GeForce RTX 3080, and even that massive GPU only really starts to show any fall-off when the link drops all the way down to PCIe 1.1 x8 (aside from a couple of outliers). The effect should be much less pronounced on the little Beige Goby GPU.
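The bandwidth comparisons in the last few paragraphs follow from each PCIe generation's per-lane transfer rate and its line encoding (8b/10b for PCIe 1.x and 2.0, 128b/130b for PCIe 3.0 and 4.0). The Python sketch below is a back-of-the-envelope calculator that assumes packet and protocol overhead can be ignored, so real-world throughput will be somewhat lower; the function name `pcie_bandwidth_gbps` is hypothetical.

```python
# Per-lane signalling rate in GT/s and line-code efficiency for each PCIe generation.
# PCIe 1.x and 2.0 use 8b/10b encoding; PCIe 3.0 and 4.0 use 128b/130b.
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),
    "2.0": (5.0, 8 / 10),
    "3.0": (8.0, 128 / 130),
    "4.0": (16.0, 128 / 130),
}


def pcie_bandwidth_gbps(gen: str, lanes: int) -> float:
    """Approximate payload bandwidth per direction in GB/s.

    Ignores packet headers and other protocol overhead, so real throughput is lower.
    """
    rate_gt_s, encoding_efficiency = PCIE_GENERATIONS[gen]
    # Each transfer moves one bit per lane; divide by 8 to convert gigabits to gigabytes.
    return rate_gt_s * encoding_efficiency * lanes / 8


# Links discussed above: the RX 6500 XT's native link, its rough equivalents,
# the same card in a PCIe 3.0 system, and the PCIe 1.1 x8 point from TechPowerUp.
LINKS = [("4.0", 4), ("3.0", 8), ("2.0", 16), ("3.0", 4), ("1.1", 8)]

if __name__ == "__main__":
    for gen, lanes in LINKS:
        print(f"PCIe {gen} x{lanes}: {pcie_bandwidth_gbps(gen, lanes):.2f} GB/s")
```

Running it puts PCIe 4.0 x4, PCIe 3.0 x8, and PCIe 2.0 x16 all near 8GB/s per direction, PCIe 3.0 x4 at roughly half that, and PCIe 1.1 x8 at about 2GB/s.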

Confusing the issue, plenty of benchmark results from amateur reviewers around the web show a PCIe x4 link causing a huge performance drop compared to x16. Unfortunately, these well-intentioned tests are mostly misleading. They are often performed by plugging a GPU into the spare PCIe x4 slot at the bottom of a motherboard. Those slots generally connect to the system chipset rather than directly to the CPU, which means large latency and bandwidth penalties, since the GPU's traffic has to contend with I/O from every other device connected to the chipset. The problem isn't the x4 interface itself, but the bus contention and the extra hop through the chipset.

RDNA 2 is a famously efficient GPU architecture, but Beige Goby is a very small GPU. Heck, the 3dfx Voodoo Banshee had a memory bus twice as wide, at 128 bits, although it didn't have 18Gbps GDDR6 memory or Infinity Cache, of course. The fill-rate specifications for the RX 6500 XT put it in RX 590, RX 5600, or RTX 2060 territory. It will be fascinating to see what AMD's littlest RDNA 2 GPU can do.