NVIDIA Says Go With A GeForce RTX GPU If You Want A Premium AI PC

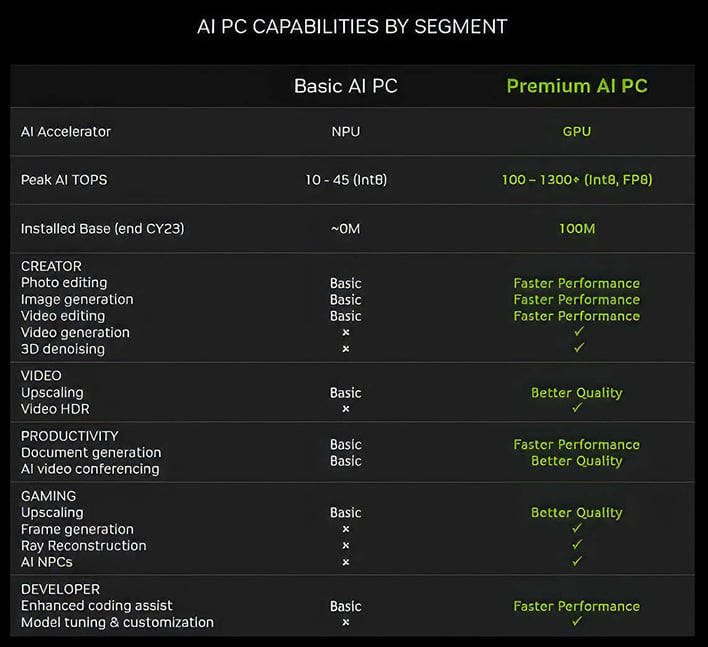

The architecture that lets a GPU fill in zillions of polygons per second is the same one that lets AI accelerators churn through input tokens at lightning speed. So, a powerful GPU can handle heavy AI workloads that would choke the NPUs included in the latest CPUs. According to NVIDIA, those components can only manage 10-45 TOPS (trillions of operations per second) of AI processing, which limits the quality and speed of AI outputs. NVIDIA's GeForce RTX 4090, on the other hand, is capable of up to 1,300 TOPS.
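To put those figures side by side, here is a quick back-of-the-envelope sketch in Python. It uses only the peak numbers NVIDIA quotes above; the variable and function names are made up for illustration, and peak TOPS is a vendor-quoted ceiling, so the ratio should not be read as a measured real-world speedup.

```python
# Peak-throughput figures quoted by NVIDIA (TOPS = trillions of operations per second).
NPU_TOPS_RANGE = (10, 45)   # range NVIDIA cites for CPU-integrated NPUs
RTX_4090_TOPS = 1300        # "up to" figure NVIDIA cites for the GeForce RTX 4090


def speedup_range(gpu_tops, npu_range):
    """Return (smallest, largest) ratio of GPU peak TOPS to NPU peak TOPS.

    Hypothetical helper for illustration only; it compares quoted peaks,
    not benchmark results.
    """
    low, high = npu_range
    return gpu_tops / high, gpu_tops / low


smallest_gap, largest_gap = speedup_range(RTX_4090_TOPS, NPU_TOPS_RANGE)
print(f"RTX 4090 peak is roughly {smallest_gap:.0f}x to {largest_gap:.0f}x the NPU range")
```

On paper, that works out to a gap of roughly 29x against the fastest NPUs in that range and 130x against the slowest.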

NVIDIA started this marketing push at an event dubbed "NVIDIA RTX for Windows AI," which may be a preview of CEO Jensen Huang's keynote at next month's Computex event. The company notes that the install base of "Premium AI PCs" is already vastly larger than that of "basic AI" PCs, with about 100 million RTX-based machines in use. Computers with integrated NPUs are just beginning to appear, and even when they're more common, NVIDIA contends you won't want to use them for local AI processing.

The talk included a few examples of how a PC running NVIDIA RTX can put even the most capable NPUs to shame. A Stable Diffusion image generation job that takes Apple's custom M3 chip over a minute could be completed on the RTX 4090 in just a few seconds. Tasks like video generation and 3D denoising aren't even possible with an NPU, and you get better results across the board when upscaling content with RTX.

Unless you have some programming know-how, there are still very few AI tools that run locally. Even on systems with NPUs and RTX GPUs, data is usually exported to the cloud, where powerful servers can do the heavy lifting. Even the mobile-optimized Galaxy AI skips the NPU in Qualcomm's latest Snapdragon chips and goes straight to the cloud. Promoting RTX as a premium AI platform could indicate that NVIDIA plans to deliver more on-device AI capabilities. Indeed, the company just rolled out ChatRTX, which can run generative AI on NVIDIA GPUs without reaching out to a cloud service.

If you've got an RTX card for games, you might get even more value from it soon.