Intel Benchmarks Show Habana Gaudi2 AI Machine Learning Chip Trouncing NVIDIA's A100

After unveiling its second generation Habana Gaudi2 AI processor last month with some preliminary performance figures, Intel has followed suit with internally run benchmarks showing its fancy accelerator outpacing NVIDIA's A100 GPU. According to Intel, its results demonstrate clear leadership training performance compared to what the competition has to offer.

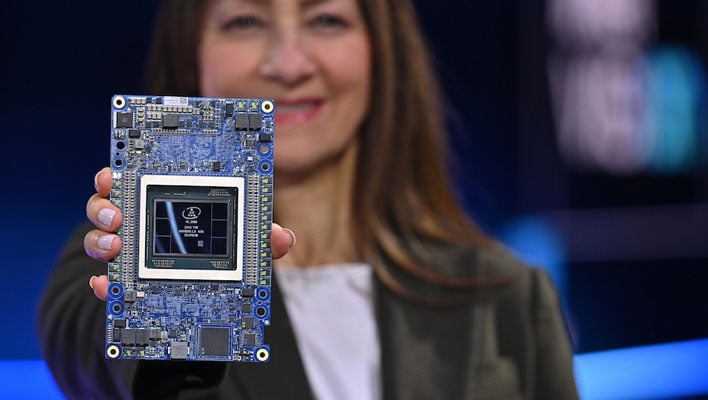

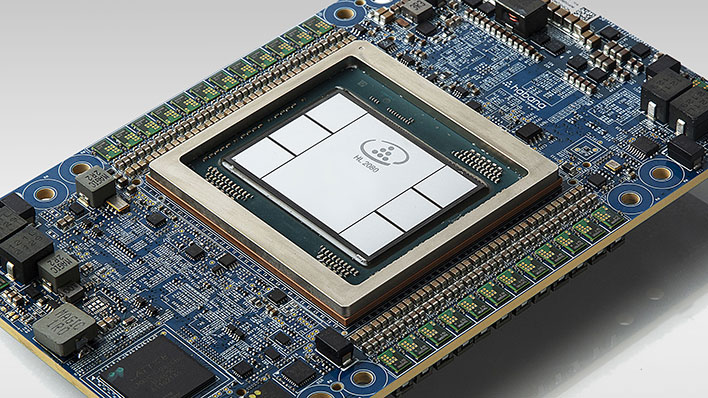

Gaudi2 is a big slab silicon, as shown in the image above. The Guadi2 processor in the center is flanked by six high bandwidth memory tiles on outside, effectively tripling the in-package memory capacity from 32GB in the previous version to 96GB of HBM2E in the current iteration, serving up 2.45TB/s of bandwidth.

Intel's latest benchmarks show what it sees as "dramatic advancements in time-to-train (TTT)" over the first-gen Gaudi chip. Even more importantly (for Intel), its latest MLPerf submission highlight several performance wins over NVIDIA's A100-80G for eight accelerators on vision and language training models.

In case you missed it, Intel acquired Habana Labs for $2 billion in late 2019, to give it a boost in a high stakes race against NVIDIA in AI and ML training. The data center is where the big dollars come from, and to that point, NVIDIA's data center revenue topped its gaming revenue for the first time last quarter ($3.75 billion compared to $3.62 billion).

Intel and NVIDIA both have massive vested interests in dominating the data center. MLPerf is an industry standard benchmark that NVIDIA often trumpets its own performance wins, so it's a fair playground. It's also easy to see why Intel is keen on flexing what it's been able to accomplish with its Gaudi2 processor.

Intel Habana Gaudi2 Versus NVIDIA A100 In MLPerf

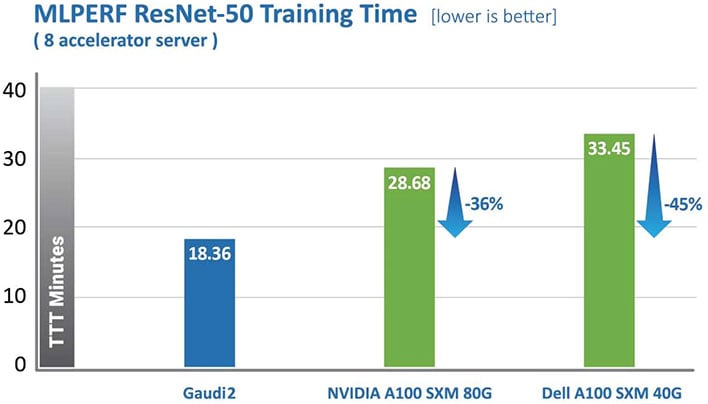

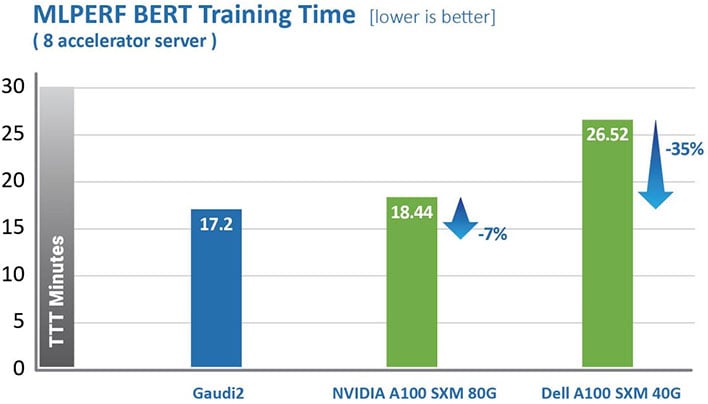

Two of the highlights Intel showed off were ResNet-50 and BERT training times. ResNet is a vision/image recognition model while BERT is for natural language processing. These are key areas in AI and ML, and both are industry standard models.

In an eight-accelerator server, Intel's benchmarks highlight up to 45 percent better performance in ResNet and up to 35 percent in BERT, compared to NVIDIA's A100 GPU. And compared to the first-gen Gaudi chip, we're looking at gains of up to 3X and and 4.7X, respectively.

"These advances can be attributed to the transition to 7-nanometer process from 16nm, tripling the number of Tensor Processor Cores, increasing the GEMM engine compute capacity, tripling the in-package high bandwidth memory capacity, increasing bandwidth and doubling the SRAM size," Intel says.

"For vision models, Gaudi2 has a new feature in the form of an integrated media engine, which operates independently and can handle the entire pre-processing pipe for compressed imaging, including data augmentation required for AI training," Intel adds.

The Gaudi family compute architecture is heterogeneous and comprises two compute engines, those being a Matrix Multiplication Engine (MME) and a fully programmable Tensor Processor Core (TPC) cluster. The former handles perations that can be lowered to Matrix Multiplications, while the latter is tailor-made for deep learning operations to accelerate everything else.

What Intel is showing are some huge wins in MLPerf that can be translated to real-world workloads. It's impressive, though bear in mind that the A100 is technically going to be legacy silicon when Hopper H100 hits. NVIDIA hasn't submitted MLPerf results for its H100 accelerator yet, and it will be interesting to see how things shake out when it does.