Intel Arc A770 And A750 Limited Edition Review: Putting Alchemist To The Test

Intel Arc A750 And A770 Limited Edition: Architecture And Features Spotlight

We have already covered the Intel Arc Alchemist GPU and the Xe-HPG architecture it's based on several times, but since this launch article is likely to get a lot of eyeballs, we thought it best to quickly summarize some of that coverage here.

As many of you are likely aware, the Intel Arc A750 and A770 Limited Edition GPUs leverage Intel's Xe-HPG (High Performance Gaming) discrete graphics architecture, which was designed to scale from thin-and-light laptops up to high-performance gaming and content creation desktops, which is where these two cards will reside.

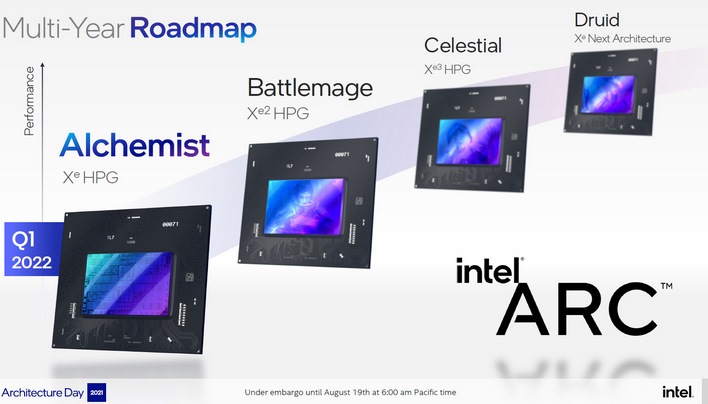

This first wave of Arc A-series cards is based on GPUs codenamed Alchemist, and follow-on Battlemage, Celestial and Druid GPU architectures are already in the works. In fact, the bulk of Intel's GPU engineers are already working on Battlemage, as Intel confirmed just last night in a video. Note the strategic A-B-C-D progression, with names that harken back to classic game character classes. We're showing you Alchemist A-series cards today. Next-gen Battlemage-based cards will be the B-series, Celestial will make up the C-series, and so on.

Entry-level mobile Intel Arc GPUs based on the scaled-down ACM-G11 chip have already shipped in a handful of notebooks in various regions. Arc A380 desktop cards (also based on ACM-G11) launched in China a while back, and those cards are now available in the U.S. too. The more powerful Intel Arc A750 and A770 Limited Edition cards we're showing you here, which target the high-volume sweet spot of the desktop gaming GPU market, are based on the larger ACM-G10.

Intel Arc Discrete GPU Silicon Explored

The Intel ACM-G10 and ACM-G11 are based on the same architecture, but target very different applications and use cases. The former is the larger of the two chips, which packs up to 32 Xe cores, 32 ray tracing units, 16MB of L2 cache, up to a 256-bit wide memory bus, and support for PCIe 4.0 x16.

The ACM-G11, on the other hand, is a much smaller chip with one-fourth the core count: up to 8 Xe cores, 8 ray tracing units, 4MB of L2 cache, up to a 96-bit memory bus, and 8 lanes of PCIe 4.0.
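Bus width only turns into bandwidth once you know the memory data rate, and this section doesn't list one. The sketch below shows the usual arithmetic (bus width in bits times per-pin data rate, divided by 8 bits per byte). The 16 Gbit/s GDDR6 rate is a hypothetical value chosen purely for illustration, not a figure from Intel.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbit_s: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each data pin moves `data_rate_gbit_s` gigabits per second, so the whole
    bus moves pins x rate gigabits per second; divide by 8 for gigabytes.
    """
    return bus_width_bits * data_rate_gbit_s / 8


# Hypothetical per-pin GDDR6 data rate (Gbit/s), assumed for illustration only.
ASSUMED_DATA_RATE_GBIT_S = 16.0

for chip, bus_bits in (("ACM-G10", 256), ("ACM-G11", 96)):
    bw = peak_bandwidth_gb_s(bus_bits, ASSUMED_DATA_RATE_GBIT_S)
    print(f"{chip}: {bus_bits}-bit bus -> {bw:.0f} GB/s at the assumed data rate")
```

Plug in a specific card's actual memory speed and bus width to get its real peak figure.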

These two chips are the foundation for five graphics solutions spanning three segmented performance tiers: Arc 3, Arc 5, and Arc 7. The numbered branding is similar to what Intel has used with its Core i3, Core i5, Core i7, and Core i9 CPUs, each with its own subset of GPU/processor models. As it relates to Arc GPUs specifically, Arc 3 targets "Enhanced Gaming" scenarios, Arc 5 is the "Advanced Gaming" tier, and Arc 7 is for "High Performance Gaming."

A Closer Look At The Intel Arc GPU Architecture

Intel's Xe-HPG is a more feature-rich and capable graphics architecture than anything Intel has previously launched. It supports DirectX 12 Ultimate with Variable Rate Shading (VRS), and has dedicated hardware engines for ray tracing (compatible with both DXR and Vulkan RT) and AI acceleration.

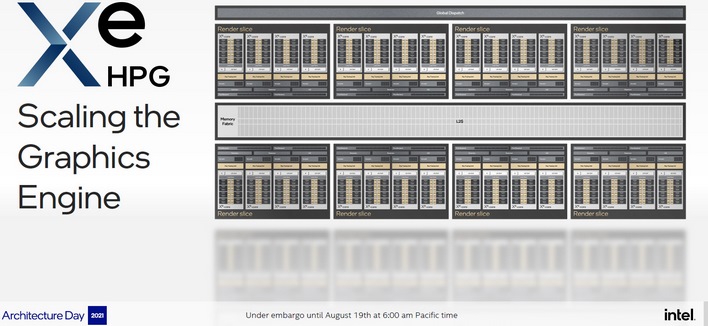

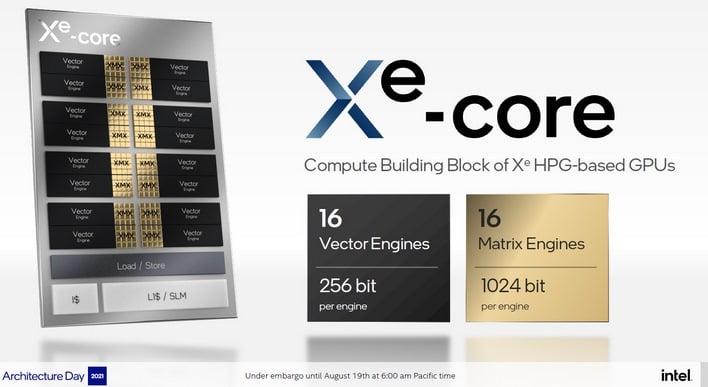

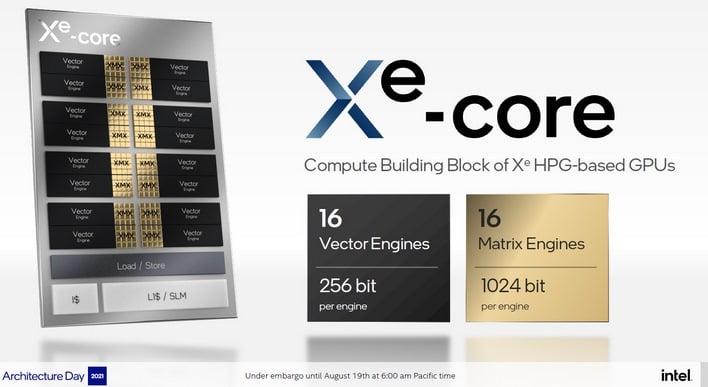

Intel organizes its Arc discrete GPUs into cores and slices. The Xe cores are the foundation of the design, and they are grouped four to a slice. Each core has one ray tracing unit (four per slice), so a fully enabled 8-slice ACM-G10 has 32 Xe cores and 32 ray tracing units. The smaller ACM-G11 has only 8 cores and 8 ray tracing units.

Each Xe core is outfitted with 16 256-bit Vector Engines and 16 1024-bit XMX Matrix Engines. There is 192KB of shared L1 cache per Xe core, which can be dynamically partitioned between L1 cache and Shared Local Memory (SLM) depending on the workload.
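The core/slice hierarchy is easiest to see by rolling the per-unit numbers up to full chips. The sketch below uses only the figures from the two paragraphs above. The 2-slice count for ACM-G11 is our inference from its 8 cores at 4 cores per slice, and the class and constant names are ours, purely for illustration.

```python
from dataclasses import dataclass

# Per-unit figures taken from the text above.
CORES_PER_SLICE = 4
RT_UNITS_PER_CORE = 1
VECTOR_ENGINES_PER_CORE = 16
XMX_ENGINES_PER_CORE = 16
L1_KB_PER_CORE = 192


@dataclass(frozen=True)
class XeHpgConfig:
    """A fully enabled Xe-HPG die, described only by its slice count."""
    name: str
    slices: int

    @property
    def xe_cores(self) -> int:
        return self.slices * CORES_PER_SLICE

    @property
    def rt_units(self) -> int:
        return self.xe_cores * RT_UNITS_PER_CORE

    @property
    def vector_engines(self) -> int:
        return self.xe_cores * VECTOR_ENGINES_PER_CORE

    @property
    def xmx_engines(self) -> int:
        return self.xe_cores * XMX_ENGINES_PER_CORE

    @property
    def total_l1_kb(self) -> int:
        # Aggregate L1/SLM capacity summed across all Xe cores.
        return self.xe_cores * L1_KB_PER_CORE


ACM_G10 = XeHpgConfig("ACM-G10", slices=8)
ACM_G11 = XeHpgConfig("ACM-G11", slices=2)  # inferred: 8 cores / 4 cores per slice

for chip in (ACM_G10, ACM_G11):
    print(chip.name, chip.xe_cores, "cores,", chip.rt_units, "RT units,",
          chip.vector_engines, "vector engines,", chip.xmx_engines, "XMX engines,",
          f"{chip.total_l1_kb} KB total L1/SLM")
```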

The Xe-HPG Vector Engines have an improved ALU design with a dedicated FP execution port and a shared Int/EM (extended math) execution port. The XMX Matrix Engines, meanwhile, are particularly well suited to AI-related workloads. Each XMX engine is capable of 128 FP16/BF16 ops/clock, 256 INT8 ops/clock, or 512 INT4/INT2 ops/clock, and it is the XMX engines that accelerate Intel's XeSS upscaling technology.
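To put those per-clock figures in context, the sketch below multiplies them across a fully enabled ACM-G10 (32 Xe cores x 16 XMX engines). It assumes the quoted rates are per XMX engine, and the 2.0 GHz clock is a hypothetical value for illustration, not an Intel specification.

```python
# Per-engine XMX throughput from the text (ops per clock).
XMX_OPS_PER_CLOCK = {"FP16/BF16": 128, "INT8": 256, "INT4/INT2": 512}

FULL_ACM_G10_XMX_ENGINES = 32 * 16  # 32 Xe cores x 16 XMX engines each


def peak_tera_ops(engines: int, ops_per_clock: int, clock_ghz: float) -> float:
    """engines x ops/clock x GHz gives giga-ops/s; divide by 1000 for tera-ops/s."""
    return engines * ops_per_clock * clock_ghz / 1000


ASSUMED_CLOCK_GHZ = 2.0  # hypothetical sustained clock, not an Intel spec

for dtype, ops in XMX_OPS_PER_CLOCK.items():
    per_clock = FULL_ACM_G10_XMX_ENGINES * ops
    tops = peak_tera_ops(FULL_ACM_G10_XMX_ENGINES, ops, ASSUMED_CLOCK_GHZ)
    print(f"{dtype}: {per_clock:,} ops/clock, ~{tops:.1f} tera-ops/s at {ASSUMED_CLOCK_GHZ} GHz")
```

These are theoretical peaks. Real AI workloads such as XeSS rarely keep every engine saturated.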

The GPUs are manufactured on TSMC’s N6 process node, which offers a marginal transistor density improvement over N7.

Intel’s discrete Arc GPUs also feature a leading-edge media engine, which supports all major codecs and was the first of its kind to offer hardware AV1 encode acceleration, though NVIDIA has also added dual AV1 encoders to the 8th Gen media engine in its upcoming RTX 40 series. Back at Architecture Day last year, Intel talked about an AI-accelerated video enhancement technology capable of high-quality hardware upscaling of low-resolution video content to 4K, and through a collaboration with Topaz Labs, that tech is supported in the company’s Video Enhance AI application. You can see it in action here...

The AV1 acceleration in Arc’s media engine is a real advantage over competing mainstream GPUs (NVIDIA's AV1 encoders will only be found in high-end cards for now). AV1 can produce higher-quality video than H.265 at similar bitrates, or similar-quality video at lower bitrates, and thus smaller files. That means AV1 encoding can cut bandwidth consumption while improving output quality, which is ideal for game streaming and other types of video streaming, or simply for reducing the storage space needed for video files.
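The storage argument is plain arithmetic: file size is bitrate times duration. The sketch below compares an hour of video at two bitrates. The 8 Mbit/s H.265 and 6 Mbit/s AV1 figures are hypothetical stand-ins for "similar quality" output, not measurements. Real savings depend heavily on the content and encoder settings.

```python
def file_size_gb(bitrate_mbit_s: float, minutes: float) -> float:
    """Approximate video stream size in decimal gigabytes.

    Ignores audio and container overhead.
    """
    megabits = bitrate_mbit_s * minutes * 60
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


# Hypothetical bitrates for roughly equal visual quality (illustrative only).
HEVC_MBIT_S = 8.0
AV1_MBIT_S = 6.0

hevc = file_size_gb(HEVC_MBIT_S, 60)
av1 = file_size_gb(AV1_MBIT_S, 60)
print(f"H.265: {hevc:.1f} GB, AV1: {av1:.1f} GB, saved: {1 - av1 / hevc:.0%}")
```

The same ratio applies to streaming, where a lower bitrate for equal quality means less upload bandwidth.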

As you can see in the demo above, AV1 encoding produces better looking output than existing codecs.

Although this is a new feature exclusive to Intel at the moment, many ISVs are already supporting the technology. FFmpeg, HandBrake, Premiere Pro, XSplit, and DaVinci Resolve all already support the media engine in Arc, with more applications sure to follow, especially with NVIDIA coming on board soon.
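Since FFmpeg is on that list, here is a minimal sketch of how an AV1 hardware encode might be driven from a script. It assumes an FFmpeg build with Intel Quick Sync support that exposes the `av1_qsv` encoder. Not every build includes it, so check `ffmpeg -encoders` first. The file names and the 6M bitrate are placeholders.

```python
import shlex


def av1_qsv_command(src: str, dst: str, bitrate: str = "6M") -> list[str]:
    """Build an FFmpeg argument list that encodes video with the av1_qsv encoder."""
    return [
        "ffmpeg",
        "-i", src,
        "-c:v", "av1_qsv",  # Intel Quick Sync AV1 encoder (only in QSV-enabled builds)
        "-b:v", bitrate,    # target video bitrate
        "-c:a", "copy",     # pass the audio stream through untouched
        dst,
    ]


cmd = av1_qsv_command("gameplay.mp4", "gameplay_av1.mkv")
print(shlex.join(cmd))
# To actually run it (needs `import subprocess`, ffmpeg on PATH, and an Arc GPU):
# subprocess.run(cmd, check=True)
```

Building the argument list separately from running it makes it easy to inspect or log the exact command before a long encode.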

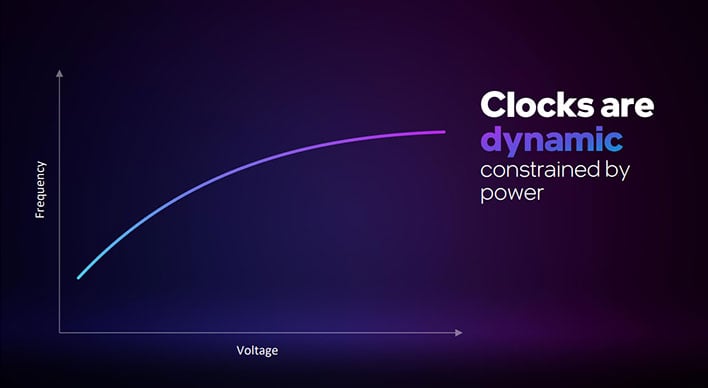

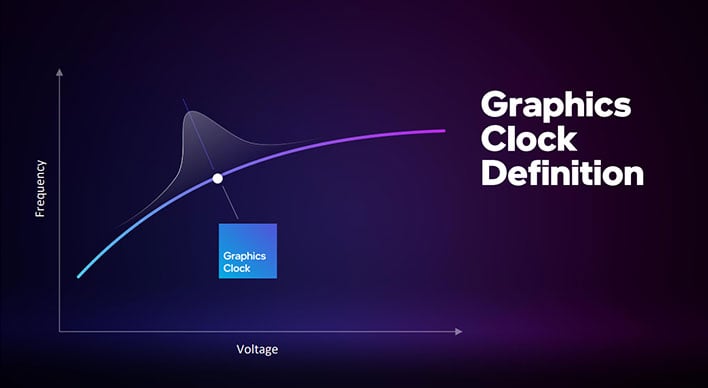

Intel Arc GPU Dynamic Clocks And Voltages

All of Intel's Arc GPUs use dynamic clocks that move along the frequency/voltage curve based on power consumption, temperature, and utilization at any given moment. The graphics clock reported in the specs is roughly the average clock delivered within a target TDP while running a typical workload (games and other applications). In the real world, as with competing GPUs, the actual clock will fluctuate above or below this typical game clock.
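Because the reported clock is an average, a log of real clock samples will usually straddle it. The sketch below summarizes such a log against a reported figure. Both the sample values and the 2100 MHz "reported" clock are hypothetical, and the function name is ours.

```python
from statistics import mean


def summarize_clocks(samples_mhz: list[int], reported_mhz: int) -> dict:
    """Summarize logged GPU clock samples against a reported 'game clock'."""
    above = sum(1 for s in samples_mhz if s > reported_mhz)
    return {
        "average_mhz": mean(samples_mhz),
        "min_mhz": min(samples_mhz),
        "max_mhz": max(samples_mhz),
        "share_above_reported": above / len(samples_mhz),
    }


# Hypothetical telemetry samples and reported clock, for illustration only.
samples = [2400, 2300, 2200, 2100, 2500]
print(summarize_clocks(samples, reported_mhz=2100))
```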

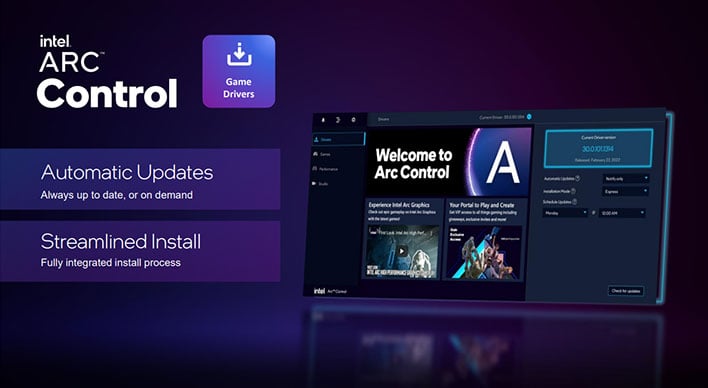

Intel has also revamped its software and drivers in preparation for Arc. To that end, the company has introduced its Arc Control suite, which is an all-in-one software experience to streamline various tasks, manage driver control toggles, and house system and GPU monitoring tools. Arc Control serves up real-time performance metrics like temps and utilization, it serves as a dashboard for broadcasting to third party platforms, and it makes fetching driver updates easy and seamless (Intel is committing to day-0 driver releases for major titles, as well). There are also performance tuning controls, which we'll show you a little later in the overclocking section.

Arc Control is easily accessible via an overlay that can be brought up using hotkeys, similar to what AMD has done with its drivers and NVIDIA offers with GeForce Experience. Arc Control also supports Intel 12th Gen integrated graphics engines, so both the iGPU and dGPU can be managed from within a single interface in laptops or systems that may be using both.

XeSS And New Features And Tools Coming With Intel's Arc Series

One of the main features Intel has been touting since it first unveiled its discrete Arc GPUs is XeSS, or Xe Super Sampling. XeSS is akin to NVIDIA’s DLSS and AMD’s FSR, in that it is a high-quality upscaler designed to improve performance and enhance the image quality of game frames initially rendered at lower resolutions. XeSS leverages Arc’s XMX Matrix engines for AI neural network processing on neighboring pixels to reconstruct and upscale frames from lower resolution game engine input frames, with better edge and texture detail than simply running the game at a lower native resolution. XeSS is open source and works with competing GPUs as well, even those without dedicated XMX engines.
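Upscalers like XeSS render internally at a reduced resolution and reconstruct the output frame. The sketch below computes those internal resolutions. The per-axis preset factors are what we understand XeSS 1.x to use. Treat them as assumptions and check Intel's XeSS documentation for the authoritative values.

```python
# Per-axis upscale factors we believe XeSS 1.x uses for its presets (assumption).
XESS_SCALE = {
    "Ultra Quality": 1.3,
    "Quality": 1.5,
    "Balanced": 1.7,
    "Performance": 2.0,
}


def render_resolution(out_w: int, out_h: int, preset: str) -> tuple[int, int]:
    """Internal render resolution that gets upscaled to out_w x out_h."""
    scale = XESS_SCALE[preset]
    return round(out_w / scale), round(out_h / scale)


for preset in XESS_SCALE:
    w, h = render_resolution(3840, 2160, preset)
    pixel_ratio = (3840 * 2160) / (w * h)
    print(f"{preset}: renders {w}x{h}, ~{pixel_ratio:.2f}x fewer pixels than native 4K")
```

Shading fewer pixels is not a direct frame-rate multiplier. Geometry work, CPU overhead, and the upscaling pass itself all take time, so a 4x pixel reduction at the Performance preset won't yield 4x the frame rate.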

Intel claims the technology can deliver a greater than 2X performance boost with Arc’s built-in XMX Matrix engines, but as mentioned, it can also work on older and competing GPUs that support the DP4a instruction. Note, however, that XeSS uses different models for its XMX and DP4a paths, so even at the same settings, output quality will differ depending on which path is used. We have some comparison screenshots later in this article to illustrate.
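DP4a is a GPU instruction that multiplies four pairs of packed 8-bit integers and adds the four products to a 32-bit accumulator in a single operation. As we understand it, this is what lets XeSS run its INT8 network on GPUs without XMX engines. The plain-Python emulation below shows what that one instruction computes. The wrap-around overflow behavior is our assumption, and this is not how Intel's fallback path is actually written.

```python
def pack_int8x4(values: list[int]) -> int:
    """Pack four signed 8-bit values into one 32-bit word (lane 0 = lowest byte)."""
    if len(values) != 4:
        raise ValueError("need exactly four lanes")
    word = 0
    for lane, v in enumerate(values):
        if not -128 <= v <= 127:
            raise ValueError(f"lane {lane} out of int8 range: {v}")
        word |= (v & 0xFF) << (8 * lane)  # store the two's-complement byte
    return word


def unpack_int8x4(word: int) -> list[int]:
    """Inverse of pack_int8x4: split a 32-bit word into four signed 8-bit lanes."""
    lanes = []
    for lane in range(4):
        byte = (word >> (8 * lane)) & 0xFF
        lanes.append(byte - 256 if byte >= 128 else byte)
    return lanes


def dp4a(a_word: int, b_word: int, acc: int) -> int:
    """acc + dot(a, b) over four int8 lanes, wrapped to a signed 32-bit result."""
    total = acc + sum(x * y for x, y in zip(unpack_int8x4(a_word), unpack_int8x4(b_word)))
    total &= 0xFFFFFFFF  # keep 32 bits, like a hardware accumulator
    return total - (1 << 32) if total >= (1 << 31) else total


a = pack_int8x4([1, 2, 3, 4])
b = pack_int8x4([5, -6, 7, -8])
print(dp4a(a, b, acc=10))  # 10 + (5 - 12 + 21 - 32) = -8
```

An XMX engine performs far more of these multiply-accumulates per clock than a DP4a-capable shader can, which is one reason the two XeSS paths use different models.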

Arc’s display engine features native support for HDMI 2.0b and DP1.4, but the design is also DP 2.0 10G ready, provided board partners install an additional power management IC (Intel's LE boards have the PMIC installed). The display engine can handle 2 x 8K60 HDR displays or 4 x 4K120 HDR displays, with refresh rates up to 360Hz at lower resolutions. The display engine also supports adaptive refresh rates, i.e. Adaptive Sync, over its DisplayPort connections, though it's not yet clear to us whether it will work over HDMI 2.0b, because the in-line PMIC may not pass through the necessary data. We'll update as we receive clarity on that point.

Intel's Arc GPUs also offer a couple of new display sync modes, namely Speed Sync and Smooth Sync. Vertical Sync, or V-Sync, is a legacy technology that synchronizes a GPU’s output to a display’s refresh rate, which was historically 60Hz. Enabling V-Sync ensures that what the GPU outputs is in sync with the display’s capabilities, so there are no visual anomalies, like tearing, caused by the GPU and monitor being out of sync. But enabling V-Sync typically introduces a significant input latency penalty, which is a bummer for fast-twitch and most competitive games.

Disabling V-Sync and letting a GPU output frames as fast as it can eliminates that latency, but it can in turn introduce screen tearing if the GPU is outputting frames faster than the monitor can display them. Speed Sync and Smooth Sync both aim to eliminate or minimize screen tearing, using different methods.
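Both the tearing and the V-Sync latency come from when a new frame arrives relative to the display's scanout. The simplified model below assumes rows are scanned top to bottom at a constant rate over one refresh interval and ignores blanking intervals. A buffer flip mid-scanout then tears at a row proportional to how far into the refresh it happens, while V-Sync instead holds the frame until the next refresh boundary.

```python
def tear_row(flip_time_ms: float, refresh_hz: float, rows: int) -> int:
    """Row where a mid-scanout buffer flip would appear as a tear line.

    Simplified model: constant-rate top-to-bottom scanout, no blanking interval.
    """
    interval_ms = 1000.0 / refresh_hz
    phase = (flip_time_ms % interval_ms) / interval_ms  # 0.0 = top of the screen
    return int(phase * rows)


def vsync_wait_ms(ready_time_ms: float, refresh_hz: float) -> float:
    """With V-Sync, a finished frame waits for the next refresh boundary."""
    interval_ms = 1000.0 / refresh_hz
    remainder = ready_time_ms % interval_ms
    return 0.0 if remainder == 0 else interval_ms - remainder


# A frame that finishes 8 ms into a 60 Hz refresh on a 1080-row panel:
print(tear_row(8.0, 60, 1080))           # 518: tear line position without V-Sync
print(round(vsync_wait_ms(8.0, 60), 2))  # 8.67: extra wait (ms) with V-Sync on
```

At 60Hz, that wait can approach a full 16.7 ms refresh interval, which is why V-Sync hurts responsiveness.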

Speed Sync works by outputting only completed frames to the display. This means there is no tearing and the GPU can run at full speed, but any extra frames the display can't show are discarded. With Smooth Sync, on the other hand, the GPU behaves as if V-Sync is disabled, but the hard lines at the boundaries where screen tearing would typically occur are dithered and blended with adjacent edges. The screen tearing is technically still there, but with the hard edges blended and smoothed out, it is much less visually jarring. Because Smooth Sync does some processing on the vast majority of frames being output to the display, it incurs a very slight performance penalty, somewhere in the neighborhood of 1% according to Intel. Unfortunately, due to time constraints we didn't get to test it fully just yet, but we will in a future article (or perhaps an update to this piece).
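Intel describes Speed Sync only at a high level. The minimal model below rests on our own assumption that it behaves like other "fast sync"-style modes: the GPU renders unthrottled, each refresh scans out the newest fully completed frame, and completed frames that are never displayed are dropped. It is not Intel's implementation, and the frame timings are hypothetical.

```python
def speed_sync(frame_done_ms: list[float], refresh_hz: float, refreshes: int):
    """Model 'show the newest completed frame at each refresh'.

    Returns (frame index shown at each refresh or None,
             number of frames completed by the last refresh but never shown).
    """
    interval_ms = 1000.0 / refresh_hz
    shown = []
    newest = None
    i = 0  # next frame to check; also counts frames completed so far
    for k in range(1, refreshes + 1):
        vblank_ms = k * interval_ms
        # Every frame finished before this refresh is a candidate; keep only the newest.
        while i < len(frame_done_ms) and frame_done_ms[i] <= vblank_ms:
            newest = i
            i += 1
        shown.append(newest)  # repeats the previous frame if nothing new finished
    displayed = {f for f in shown if f is not None}
    discarded = i - len(displayed)
    return shown, discarded


# Hypothetical GPU finishing a frame every 4 ms (250 fps) on a 60 Hz display.
frames = [4.0 * (n + 1) for n in range(30)]
shown, discarded = speed_sync(frames, refresh_hz=60, refreshes=5)
print(shown, discarded)
```

In this model, only one in four completed frames reaches the screen at 250 fps on a 60Hz panel. The upside is that every refresh shows a whole frame, with no tear line, and that frame is the freshest one available.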