Technophilosopher Claims AI Game Characters Have Feelings And Should Have Rights, Wait What?

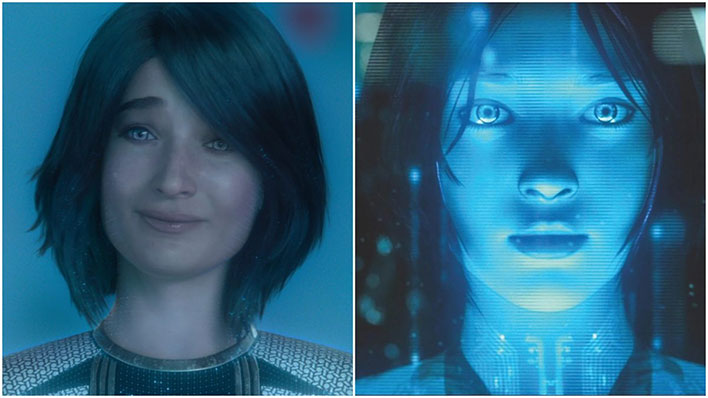

Did you ever stop and consider if that random bystander in Grand Theft Auto V had a family before you beat him to a pulp? Or what about the many guards in The Elder Scrolls V: Skyrim, all of whom took an arrow to the knee? Did you ask any of them if they need assistance? Of course not, because NPCs aren't actual living things with emotions and consciousness. Not yet, anyway, but as AI continues to advance, it could pose some interesting scenarios in games and in the metaverse.

So suggests David Chalmers, a technophilosopher and professor at New York University who had an interesting chat about AI with PC Gamer. AI is always a hot topic these days, but even more so after a Google engineer was put on paid administrative leave for, according to him, raising AI ethics concerns within the company.

In case you missed that whole brouhaha, engineer Blake Lemoine has come to the conclusion that Google's Language Model for Dialogue Applications, or LaMDA, is sentient and has a soul. His argument is based on various chats he had with LaMDA, in which the AI served up responses that appeared to show self-reflection, a desire to improve, and even emotions.

AI is certainly getting better at creating the illusion of sentience and has come a long way since the days of Dr. Sbaitso, for those of you who are old and geeky enough to remember the AI speech synthesis program Creative Labs released with its Sound Blaster card in the early 1990s. But could AI NPCs truly achieve consciousness one day? That's one of the questions PCG posed to Chalmers.

"Could they be conscious? My view is yes," Chalmers said. "I think their lives are real and they deserve rights. I think any conscious being deserves rights, or what philosophers call moral status. Their lives matter."

That's an interesting and certainly controversial viewpoint. There's also an inherent conundrum that goes along with this. If AI advancements lead to conscious NPCs, then is it even ethical to create them in games? Think about all the horrible things we do to NPCs in games that we wouldn't (or shouldn't) do to real people. And then there is the question of repercussions if, as Chalmers suggests, NPCs deserve rights.

"If you simulate a human brain in silicon, you'll get a conscious being like us. So to me, that suggests that these beings deserve rights," Chalmers says. "That's true whether they're inside or outside the metaverse... there's going to be plenty of AI systems cohabiting in the metaverse with human beings."

The metaverse makes the situation even murkier, because even when you're not logged in and participating, NPCs will still be moseying about and doing whatever it is they do. You know, sort of like the movie Free Guy.

What do you think: will AI ever truly achieve consciousness and have feelings, as Chalmers suggests? Sound off in the comments section below.