Should AI Chatbots Write News Articles? Google's Former Safety Boss Sounds Off

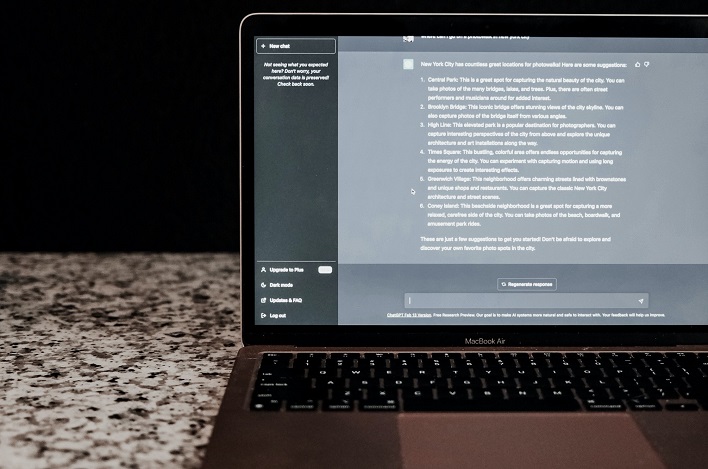

It is no secret that some news outlets are already using AI to write some of their content. CNET, for example, was found to be publishing AI-written articles and then had to go back and correct factual errors in them. There are also websites, known as content farms, that rely entirely on AI-generated articles. As it becomes harder for even mainstream news outlets to turn a profit, the prospect of replacing human writers, who cost more, is becoming increasingly attractive to some.

When asked what dangers or challenges he sees in news organizations' recent efforts to use AI-generated content, Narayan, Google's former safety boss, pointed out that it can be difficult to tell which stories are written entirely by AI and which are not. He added, "That distinction is fading."

Narayan believes it is important to establish some principles, such as letting readers know when an article has been generated using AI. A second principle he touched on was having a competent editorial team that can keep "hallucinations" from making it into print or online. Editors would need to check for factual errors, or for issues such as political slant, just as they would for a human writer. In short, publications cannot simply take AI at its word.

Much as teachers struggle to tell whether a student has used something like ChatGPT to write an essay, Narayan says it is becoming extremely difficult to tell whether a news outlet has used AI software to write an entire article. He also raises some ethical questions, such as "Who has that copyright, who owns that IP?"

Although Narayan does not see anything wrong with using AI to write articles, he does believe there needs to be transparency. He remarked, "It is important for us to indicate either in a byline or in a disclosure that content was either partially or fully generated by AI. As long as it meets your quality standards or editorial standards, why not?"

To be fully transparent, this was completely written by a human.