Researchers Hack Echo, Google Home, HomePod Speakers With Cheap Lasers

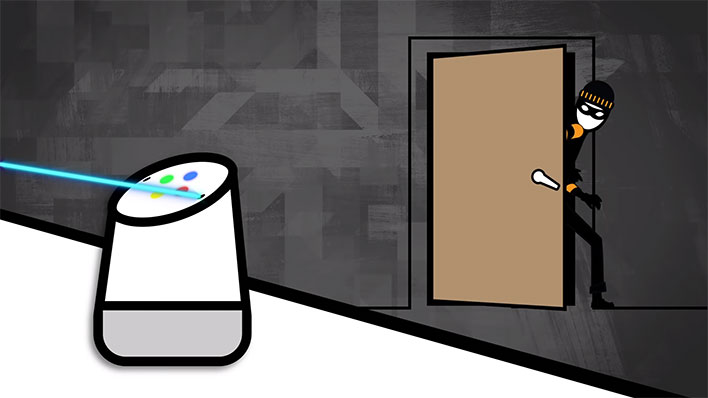

It's bad enough we have to worry about sharks with frickin' laser beams attached, but now we have to be vigilant against the threat lasers present to smart home speakers and other devices with digital assistants. How so? Security researchers at the University of Electro-Communications in Tokyo and at the University of Michigan warn that relatively cheap laser pointers can be modded to inject inaudible and invisible commands into voice assistants.

This method of hacking affects all of the popular voice assistants, including Google Assistant, Amazon Alexa, Apple Siri, and Facebook Portal.

"The implications of injecting unauthorized voice commands vary in severity based on the type of commands that can be executed through voice. As an example, in our paper we show how an attacker can use light-injected voice commands to unlock the victim's smart-lock protected home doors, or even locate, unlock and start various vehicles," the researchers say.

As outlined in the video above, smart speakers and other voice-controlled devices convert audible commands into electrical signals, which then get processed and carried out. On the surface, this seems rather harmless: a robber sitting outside your house would have to yell loudly to penetrate the walls and windows.

However, the same commands can be encoded in the light from a laser pointer or even a flashlight, which the device's microphone then picks up as if it were sound. These are not theoretical threats, either. The researchers who are, uh, shining a light on the topic demonstrated sending a laser command to a Google Home speaker instructing it to open a garage door. Have a watch...

In the example above, the demonstration has the laser positioned rather close to the speaker. However, the researchers were also able to compromise a voice assistant from more than 350 feet away by using a telephoto lens to focus the laser.

"This opens up an entirely new class of vulnerabilities," Kevin Fu, an associate professor of electrical engineering and computer science at the University of Michigan, told The New York Times. "It’s difficult to know how many products are affected, because this is so basic."

Fortunately, there is no evidence of light-based attacks being used on smart speakers in the wild. As the market for smart devices continues to grow, however, this could become a real concern, at least on current-generation hardware and software. The researchers say they are collaborating with Amazon, Apple, Google, and others to come up with defensive measures against this sort of attack.