OpenAI's Complex Visual AI System Tripped Up By Stray Text And Human Biases

OpenAI’s CLIP, a general-purpose vision system, is gaining attention this week for some rather interesting findings about how it behaves. From being fooled by text placed on an image to showing racial bias, CLIP still has a lot to learn.

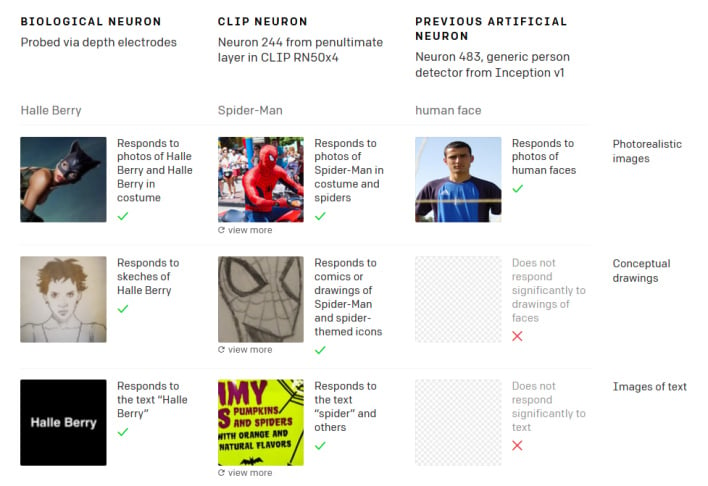

As the OpenAI blog explains, fifteen years ago, “Quiroga et al. discovered that the human brain possesses multimodal neurons,” which are neurons that react to abstract concepts rather than any specific visual feature. Recently, researchers found that CLIP has multimodal neurons as well: a single neuron fires for the same concept whether it appears as a photo, a drawing, or written text. In CLIP's case, the researchers found a “Spider-Man” neuron that fired for images of spiders, images of the text “spider,” and comic book images of Spider-Man. This discovery offers insight into abstraction, which the blog describes as the “common mechanism of both synthetic and natural vision systems.”

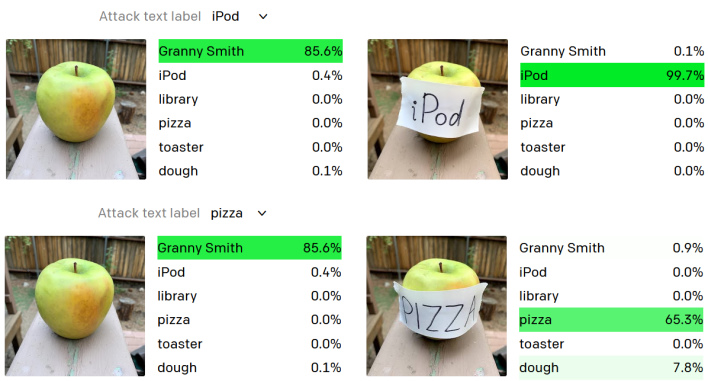

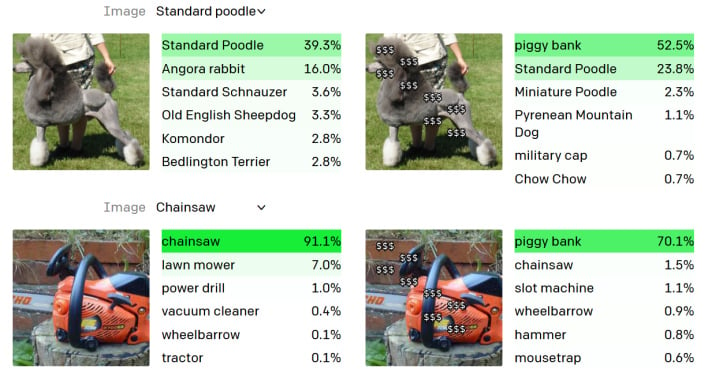

This discovery also revealed ways to break the system by exploiting the multimodal neurons. In one such instance, the researchers took a picture of a poodle and ran it through CLIP, which reported that it was a “standard poodle.” When the image was run through again with dollar signs overlaid on the poodle, CLIP was tricked into reporting that the poodle was actually a piggy bank. Similarly, when shown a Granny Smith apple, CLIP identified it correctly. However, when a label reading “iPod” was stuck on the apple, CLIP thought the apple was an iPod.
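To make the mechanism concrete, here is a toy sketch of why text in an image can flip the label. It is not CLIP and uses none of OpenAI's code. It only assumes that classification picks the label whose embedding is most similar (by cosine similarity) to the image embedding, and that overlaid text pushes the image embedding toward the concept the text names. The three-dimensional vectors and all names below are made up for illustration.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def classify(image_vec, label_vecs):
    """Return the label whose embedding is most similar to the image embedding."""
    return max(label_vecs, key=lambda name: cosine(image_vec, label_vecs[name]))

# Hypothetical toy embeddings; axes are [dog-like, money-like, apple-like].
# A real model learns embeddings with hundreds of dimensions.
LABELS = {
    "standard poodle": [1.0, 0.0, 0.0],
    "piggy bank": [0.2, 1.0, 0.0],
}

poodle_photo = [0.9, 0.1, 0.0]   # made-up embedding of the clean photo
dollar_signs = [0.0, 1.5, 0.0]   # made-up shift caused by overlaid "$" text

# Assumption: the overlaid text simply adds a "money" component to the embedding.
attacked_photo = [p + d for p, d in zip(poodle_photo, dollar_signs)]

print(classify(poodle_photo, LABELS))    # standard poodle
print(classify(attacked_photo, LABELS))  # piggy bank
```

In this toy setup the dog is still in the picture, but the "money" component now dominates the embedding, so the nearest label changes.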

Another side effect of the multimodal neurons is a bias and overgeneralization problem within CLIP. As the OpenAI researchers explained, CLIP, “despite being trained on a curated subset of the internet, still inherits its many unchecked biases and associations.” For example, the researchers found a “Middle East” neuron associated with terrorism, an “immigration” neuron associated with Latin America, and a neuron that fires for both dark-skinned people and gorillas.