NVIDIA Unveils DLSS 3.5 With AI Ray Reconstruction And Not Just For RTX 40 GPUs

If you want to know how ray tracing works, it's all in the name: the renderer simulates ("traces") the bouncing of light "rays" to determine how the scene should be lit and colored. This is great, but objects are huge and light particles are very, very small. To get truly simulated lighting, you would need billions and billions of rays per screen pixel, and ain't nobody got time for that. Instead, we typically trace (or "fire") as few as one ray per pixel. In the end, this simply isn't enough data, so it produces an incredibly noisy image, like this:
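To see why one ray per pixel is so noisy, here is a minimal Python sketch. It is not how any real renderer works: each hypothetical "ray" is just a coin flip that reaches the light with a fixed probability, and the function names and the 0.3 brightness value are made up for illustration. What it does show is the general Monte Carlo behavior, where the noise only shrinks with the square root of the ray count.

```python
import random
import statistics


def estimate_pixel(samples_per_pixel, true_brightness, rng):
    """Average the results of several random "rays" for one pixel.

    Illustrative assumption: each ray returns 1.0 if it reaches the light
    and 0.0 otherwise, hitting with probability `true_brightness`.
    """
    hits = sum(1.0 for _ in range(samples_per_pixel) if rng.random() < true_brightness)
    return hits / samples_per_pixel


def noise_level(samples_per_pixel, true_brightness=0.3, pixels=2000, seed=1):
    """Standard deviation of the estimate across many identical pixels."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    estimates = [
        estimate_pixel(samples_per_pixel, true_brightness, rng)
        for _ in range(pixels)
    ]
    return statistics.pstdev(estimates)


if __name__ == "__main__":
    for spp in (1, 4, 16, 64, 256):
        print(f"{spp:4d} rays/pixel -> noise {noise_level(spp):.3f}")
```

In this toy model, going from 1 to 64 rays per pixel only cuts the noise by roughly a factor of eight, which is why brute force gets expensive fast.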

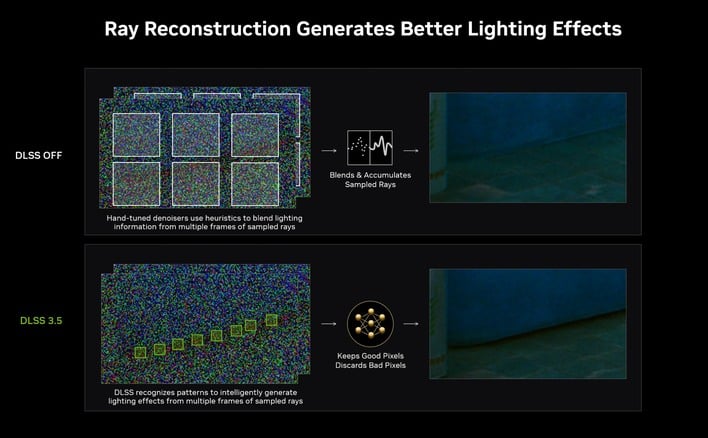

The way you get from something like that to the gorgeous ray-traced images you've seen of games like Cyberpunk 2077 is through a process called "denoising." In essence, hand-tuned algorithms carefully crafted for each type of light and each type of scene reduce the high-frequency noise into an image that looks more like what you'd expect—well, more like reality. The thing is, these denoisers really struggle at times because they're working with so little input data compared to the final scene, and this issue gets compounded when you start dealing with smart upscalers like DLSS, as you're using the same data to fill more of the screen.

Anyone who has played a path-traced game, like Cyberpunk 2077 in "Ray Tracing: Overdrive" mode, or even a game with extensive RT effects like Dying Light 2: Stay Human, will be instantly familiar with ray-tracing denoising artifacts. It's ghosting of the worst sort, like your monitor is an MVA LCD from 2006. Objects leave visible trails behind them, and lighting effects can take multiple frames to update the scene. That can add up to 100 milliseconds or more if your framerate is low, easily within the threshold of "noticeable."
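For a rough feel of where that lag comes from, here is a back-of-the-envelope Python sketch. It assumes the denoiser blends each new frame into a running history with a fixed weight, a common temporal-accumulation approach; nothing here comes from NVIDIA or from any particular game, and the `blend` value of 0.2 is purely hypothetical.

```python
import math


def frames_to_converge(blend, target=0.9):
    """Frames until a running average covers `target` of a sudden lighting change.

    Assumes history = (1 - blend) * history + blend * new_frame each frame,
    so after n frames the result has moved 1 - (1 - blend)**n of the way.
    """
    if not 0.0 < blend <= 1.0:
        raise ValueError("blend must be in (0, 1]")
    if blend == 1.0:
        return 1  # no history kept: the change shows up immediately
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - blend))


def lag_ms(blend, fps, target=0.9):
    """Convert the frame count into milliseconds at a given framerate."""
    return frames_to_converge(blend, target) * 1000.0 / fps


if __name__ == "__main__":
    for fps in (30, 60, 120):
        print(f"{fps:3d} fps -> {lag_ms(0.2, fps):.0f} ms to show 90% of a lighting change")
```

With these made-up numbers, the history needs 11 frames to catch up, which works out to well over 100 milliseconds at 30 fps and still close to it at 120 fps.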

NVIDIA says that if you load up a game like Cyberpunk 2077 right now, a lot of the work that the ray-traced renderer is doing is actually being discarded by the denoisers, particularly in terms of global illumination and reflections. Because the denoisers are tuned to reduce noise, highly detailed effects that only appear on small regions of the screen look like noise to the relatively simple algorithm trying to generate a coherent image out of the ray-traced noise.
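The effect is easy to reproduce with the crudest possible stand-in for a denoiser. The Python sketch below runs a plain box blur over a single row of pixels; real game denoisers are far more sophisticated, and the function name and pixel values are invented for illustration. The point is only that a filter built to average away speckles can't tell a one-pixel highlight from a one-pixel speck of noise.

```python
def box_blur(row, radius):
    """Average each pixel with its neighbours within `radius`.

    Windows are simply truncated at the edges of the row.
    """
    n = len(row)
    out = []
    for i in range(n):
        lo = max(0, i - radius)
        hi = min(n - 1, i + radius)
        window = row[lo:hi + 1]
        out.append(sum(window) / len(window))
    return out


# A dim surface (0.1) with one bright, legitimate highlight in the middle.
row = [0.1] * 11
row[5] = 1.0

blurred = box_blur(row, radius=2)

if __name__ == "__main__":
    print(f"highlight before: {row[5]:.2f}, after: {blurred[5]:.2f}")
```

After filtering, the highlight keeps less than a third of its original brightness and is smeared across its neighbors, which is the kind of detail loss described above.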

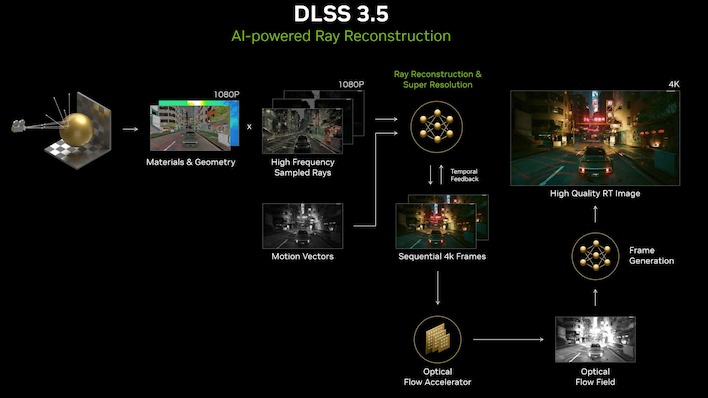

Well, NVIDIA just announced the solution to these woes: DLSS 3.5, now with a new feature called Ray Reconstruction. Put simply, it's like DLSS super resolution, but specifically for ray-tracing. If that confuses you, understand that we're talking about the process of compositing the scene before upscaling. In other words, instead of using a slower and more taxing denoiser to achieve better results, NVIDIA is side-stepping the whole process by replacing the collection of hand-tuned denoisers currently used for ray-tracing with an AI model that does it better and faster.

The AI denoiser, which NVIDIA says was trained on five times more data than the model used for DLSS 3, is smart enough to recognize even small areas of shading and lighting. As a result, ray-traced scenes using it look sharper, more accurate, and less prone to ghosting artifacts (at least judging by NVIDIA's demo footage in its video about the technology).

By the way, Ray Reconstruction isn't just for video games. The company also demonstrated a sample of the technique at work in the popular D5 Render ray-tracing renderer, used with apps like 3ds Max, Blender, Cinema 4D, and others. While you wouldn't necessarily need this technique for an offline render (you could instead choose to simply cast thousands of times more rays per pixel), it does give a much more accurate preview of what your scene will look like while you're working on it.

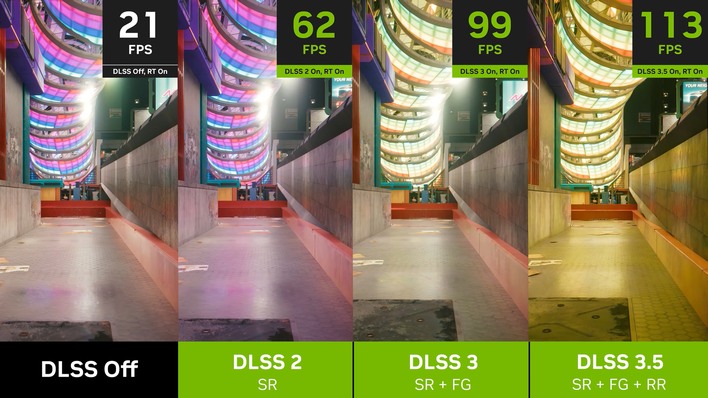

Ray Reconstruction doesn't usually help performance, but it can in scenes with lots of rays.

Despite the version number increase, Ray Reconstruction actually doesn't have anything to do with Frame Generation. Frame Generation still requires an Ada Lovelace graphics card—that's the GeForce RTX 40 series, if you didn't know—but NVIDIA says that Ray Reconstruction can be done on "any GeForce RTX GPU," presumably going all the way back to the Turing chips in the GeForce RTX 20 series. We're not sure if that's a realistic expectation given the sharp performance leap between Turing and Ampere (particularly in ray-tracing), but it's nice to know that the company hasn't left its faithful behind.

Unfortunately, this technology isn't something you can just enable in the NVIDIA Control Panel. Developers will have to integrate it into their games, the first of which will of course be Cyberpunk 2077's Phantom Liberty expansion launching on September 26th. If you bounced off Cyberpunk in the past, this could be a great chance to try again, as the expansion is bringing massive overhauls of the core game along with it. After that, NVIDIA says that Ray Reconstruction is coming to Remedy's upcoming Alan Wake 2, and then to its RTX Remix toolset, starting with Portal with RTX.