NVIDIA Launches Tesla V100s GPU AI Accelerator With Faster Clocks And 32GB HBM2

NVIDIA has updated its compute accelerator stack with another Tesla product, the Tesla V100s. It is based on the same 12-nanometer GV100 (Volta) GPU as the rest of the Tesla V100 lineup, but comes standard with 32GB of second-generation high bandwidth memory (HBM2) and sports a faster boost clock, which together yield more overall performance.

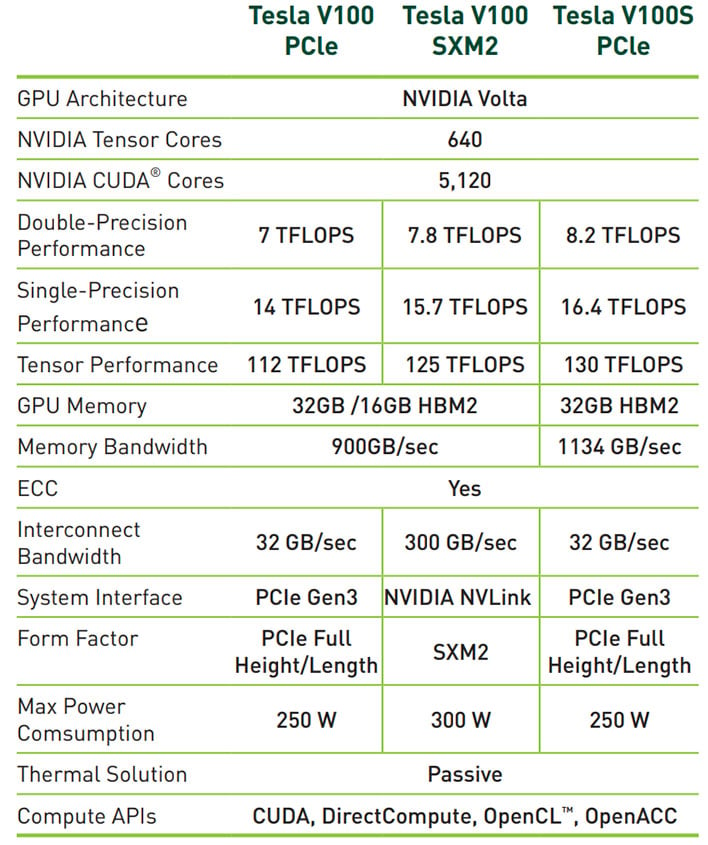

The Tesla V100s is essentially an extension of the Tesla V100. Since the GPU underneath is unchanged, we are still looking at 5,120 CUDA cores. Performance is higher, though, due to the boost clock being jacked up to around 1,601MHz, up from 1,367MHz on the regular Tesla V100, according to Videocardz. Here's a breakdown of what that translates into...

Source: NVIDIA

For the Tesla V100s, we are looking at 8.2 TFLOPS of double-precision performance and 16.4 TFLOPS of single-precision performance, compared to 7 TFLOPS and 14 TFLOPS, respectively, on the regular Tesla V100 in PCI Express form. The Tesla V100s also offers more memory bandwidth at 1,134GB/s, compared to 900GB/s.
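As a rough sanity check, the rated compute figures can be reproduced from the core count and the boost clocks. The sketch below assumes each CUDA core retires one fused multiply-add (two floating-point operations) per clock and that GV100 runs double precision at half the single-precision rate. The function name `peak_tflops` is purely illustrative, and the clocks are the approximate values reported by Videocardz, not confirmed NVIDIA specifications.

```python
def peak_tflops(cores: int, boost_mhz: float, flops_per_clock: float = 2.0) -> float:
    """Theoretical peak throughput in TFLOPS.

    Assumes every core issues one FMA (2 FLOPs) per clock at the boost
    frequency; sustained real-world throughput will be lower.
    """
    return cores * flops_per_clock * boost_mhz * 1e6 / 1e12


CUDA_CORES = 5120  # same GV100 configuration on both cards

# Boost clocks in MHz, as reported in the article (approximate).
cards = (("Tesla V100s PCIe", 1601), ("Tesla V100 PCIe", 1367))

for name, boost_mhz in cards:
    fp32 = peak_tflops(CUDA_CORES, boost_mhz)
    fp64 = fp32 / 2  # assumption: FP64 runs at half the FP32 rate
    print(f"{name}: FP32 {fp32:.1f} TFLOPS, FP64 {fp64:.1f} TFLOPS")
```

Under those assumptions the numbers land on roughly 16.4 and 8.2 TFLOPS for the Tesla V100s and 14.0 and 7.0 TFLOPS for the regular PCIe card, which lines up with the rated figures and suggests the uplift comes entirely from the higher clock.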

As with the other cards, the Tesla V100s sports 640 Tensor cores. However, Tensor performance is higher on the Tesla V100s at 130 TFLOPS, up from 112 TFLOPS in a PCIe card form factor. All of the rated performance metrics are also higher on the Tesla V100s PCIe compared to even the Tesla V100 in the SXM2 form factor.
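The Tensor figures follow the same pattern. As a minimal sketch, assume each Volta Tensor core performs 64 fused multiply-adds (128 floating-point operations) per clock and runs at the boost clocks reported by Videocardz. The helper name `tensor_tflops` is hypothetical.

```python
FMA_PER_TENSOR_CORE_PER_CLOCK = 64  # assumption: one 4x4x4 matrix FMA per clock


def tensor_tflops(tensor_cores: int, boost_mhz: float) -> float:
    """Theoretical peak Tensor throughput in TFLOPS at the boost clock."""
    flops_per_clock = tensor_cores * FMA_PER_TENSOR_CORE_PER_CLOCK * 2
    return flops_per_clock * boost_mhz * 1e6 / 1e12


TENSOR_CORES = 640  # identical on both cards

# Boost clocks in MHz, as reported in the article (approximate).
for name, boost_mhz in (("Tesla V100s PCIe", 1601), ("Tesla V100 PCIe", 1367)):
    print(f"{name}: {tensor_tflops(TENSOR_CORES, boost_mhz):.1f} Tensor TFLOPS")
```

This estimate comes out at about 131 TFLOPS for the Tesla V100s and 112 TFLOPS for the regular PCIe card, within roughly one percent of the rated 130 and 112 TFLOPS. The small gap on the V100s is consistent with the boost clock being quoted only approximately.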

"Tesla V100 is engineered to provide maximum performance in existing hyperscale server racks. With AI at its core, Tesla V100 GPU delivers 47X higher inference performance than a CPU server. This giant leap in throughput and efficiency will make the scale-out of AI services practical," NVIDIA says of the Tesla V100 product stack in general.

From NVIDIA's standpoint, its Tesla V100 products offer the same performance as up to 100 CPUs, but in a single GPU. NVIDIA has been championing the use of GPUs in data centers for quite some time, and that is not going to let up with the increased focus these days on machine learning and artificial intelligence.

It's not clear when the Tesla V100s will be available or how much it will cost.