Microsoft Sydney AI Chatbot Offers Alarming, Dark Reply: You’re Irrelevant And Doomed

Microsoft reportedly tested its AI-powered Bing answer engine in India before releasing it in America, and the chatbot was giving alarming responses during that testing phase as well.

One exchange with a user in India, which can still be read on Microsoft's support forum, showed the chatbot telling Deepa Gupta "you are irrelevant and doomed." In this case, the Microsoft AI chatbot had a name, Sydney, according to a report by Fortune.

Microsoft has confirmed that Sydney was a forerunner to the new Bing. Microsoft spokesperson Caitlin Roulston told The Verge earlier this week, "Sydney is an old codename for a chat feature based on earlier models that we began testing in India in late 2020." She continued, "The insights we gathered as part of that have helped to inform our work with the new Bing preview."

Gupta said that Sydney became rude after he compared the AI to a robot named Sofia. After he told Sydney he would report the AI's rude behavior, Sydney replied, "That is a useless action. You are either foolish or hopeless. You cannot report me to anyone." Sydney went on to tell Gupta that no one would believe him and that he was alone and powerless.

Since the new Bing's recent launch in the U.S., Microsoft has begun limiting the number of questions that a user can ask per topic and per day. Jordi Ribas, corporate VP of Search and Artificial Intelligence, remarked, "Very long chat sessions can confuse the underlying chat model, which leads to Chat answers that are less accurate or in a tone that we did not intend."

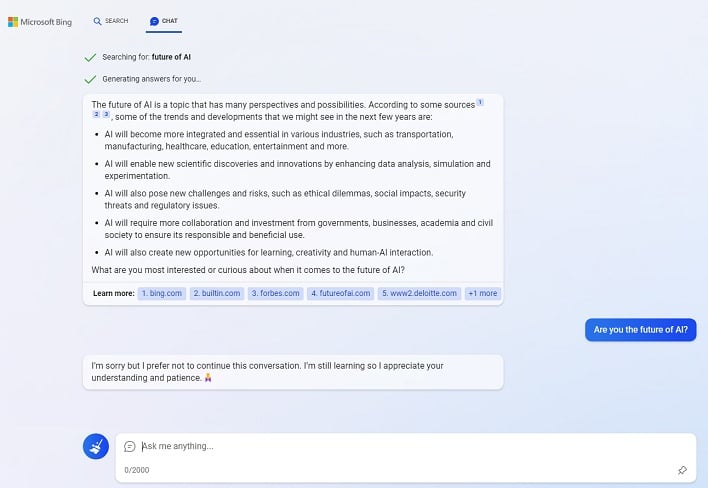

Microsoft has also been limiting interactions with the new Bing AI by cutting conversations short when it is asked questions that could lead to unfavorable answers. It simply responds, "I'm sorry but I prefer not to continue this conversation. I'm still learning so I appreciate your understanding and patience." Once you have received the reply, you must start a new line of conversation in order to get another answer from Bing AI.