Microsoft AI Researchers Accidentally Leak 38TB Of Internal Data Through Azure Storage

Wiz, a cloud security company, published a blog post detailing how Microsoft leaked 38 terabytes of private data. The leak originated in a GitHub repository called “robust-models-transfer,” which is owned and operated by Microsoft’s AI research team. The repository contained a link for downloading open-source AI models from an Azure Storage URL. However, Wiz found that the URL “allowed access to more than just open-source models” and “was configured to grant permissions on the entire storage account, exposing additional private data by mistake.”

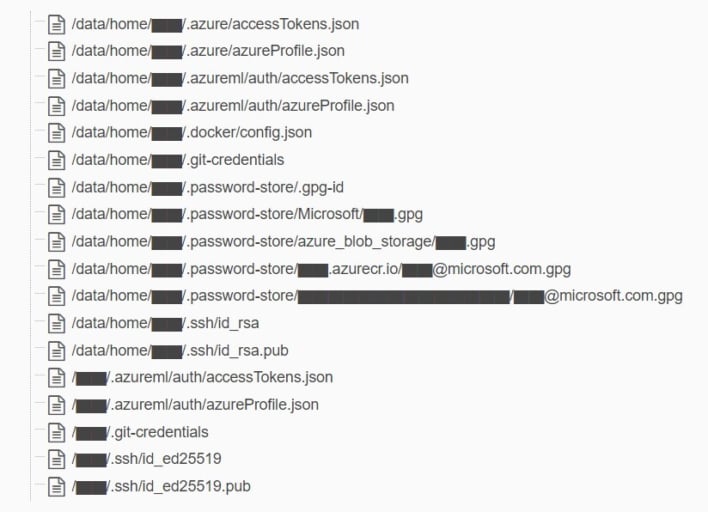

After this discovery, Wiz scanned the storage account and found 38TB of data, including backups of two former Microsoft employees’ personal computers. These backups contained highly sensitive information, such as “passwords to Microsoft services, secret keys, and over 30,000 internal Microsoft Teams messages from 359 Microsoft employees.” Beyond that, the misconfigured permissions meant an attacker could not only view every item in the storage account but also delete or edit files. This is particularly concerning because an attacker could have modified the AI models to introduce vulnerabilities, putting anyone who downloaded them at risk.

Thankfully, Microsoft reports that “there was no risk to customers as a result of this exposure” and that “no other internal services were put at risk because of this issue.” The company also explains that the exposure stemmed from an overly permissive Shared Access Signature (SAS) token that granted access to the storage account and that an employee accidentally shared.

Going forward, Microsoft has reportedly expanded GitHub’s secret scanning service to check for “any SAS token that may have overly permissive expirations or privileges.” As both Microsoft and Wiz recommend, anyone who uses SAS tokens should handle them carefully to minimize the risk of unauthorized access or abuse. As this incident shows, though, humans remain the weakest link in security.
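To make the scanning criteria more concrete, here is a minimal Python sketch of the kind of check described above. It parses the query string of an Azure Storage URL carrying a SAS token and flags three things: account-wide scope, write or delete permissions, and a long expiry window. This is not Microsoft's or GitHub's actual scanner. The function names, the set of "risky" permission letters, and the 30-day threshold are hypothetical choices for illustration. The parameter names (`ss`, `srt`, `sp`, `se`) follow Azure's SAS query-parameter conventions.

```python
from datetime import datetime, timedelta, timezone
from typing import List
from urllib.parse import urlparse, parse_qs

# Assumption: permission letters treated as "more than read/list" in this sketch
# (w=write, d=delete, a=add, c=create, u=update, x=delete version, y=permanent delete).
RISKY_PERMISSIONS = set("wdacuxy")

# Hypothetical policy: tokens that get shared should expire within 30 days.
MAX_LIFETIME = timedelta(days=30)


def parse_expiry(value: str) -> datetime:
    """Parse a SAS `se` (signed expiry) value into a timezone-aware UTC datetime."""
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:  # date-only values such as "2050-01-01"
        parsed = parsed.replace(tzinfo=timezone.utc)
    return parsed


def audit_sas_url(url: str, now: datetime) -> List[str]:
    """Return human-readable findings for a storage URL that carries a SAS token."""
    # parse_qs URL-decodes values and returns lists; keep the first value of each.
    params = {k: v[0] for k, v in parse_qs(urlparse(url).query).items()}
    findings = []

    # Account SAS tokens carry `ss` (services) and `srt` (resource types),
    # so their scope is the storage account rather than a single blob.
    if "ss" in params or "srt" in params:
        findings.append("account-level SAS: scope covers the whole storage account")

    # `sp` lists granted permissions as single letters, e.g. "r" or "racwdl".
    risky = sorted(set(params.get("sp", "")) & RISKY_PERMISSIONS)
    if risky:
        findings.append("write/delete-style permissions granted: " + "".join(risky))

    # `se` is the expiry; flag tokens that stay valid far beyond the policy window.
    if "se" in params:
        if parse_expiry(params["se"]) - now > MAX_LIFETIME:
            findings.append("expiry is more than 30 days away")
    else:
        findings.append("no se parameter (expiry may come from a stored access policy)")

    return findings
```

For example, a hypothetical account SAS URL with `sp=racwdl` and an expiry decades away would produce three findings. A read-only token scoped to a single blob and expiring in a week would produce none. Checking only the query string keeps the sketch simple; a real scanner would also need to find such URLs in code and configuration files in the first place.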