Intel Arc GPUs Just Brought A Big Boost To PyTorch For Llama 2

Running large models well depends on software tuned for the hardware underneath, and for Intel's CPUs and GPUs that tuning comes through the Intel Extension for PyTorch, or "IPEX". This package extends PyTorch with optimizations that specifically target Intel's compute hardware, including the AVX-512 VNNI and AMX instructions on CPUs as well as the Xe Matrix Extensions (XMX) units in Intel's Xe GPU architecture.

Intel just put out a blog post detailing how to run Meta AI's Llama 2 large language model on one of its Arc A770 graphics cards. It'll have to be an A770, and the 16GB version at that, because the model requires some 14GB of GPU memory to do its thing. If you have the requisite GPU, the blog post includes detailed instructions to help you set it up.
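As a rough sanity check on that 14GB figure, weight memory is just parameter count times bytes per parameter. The sketch below assumes the 7-billion-parameter Llama 2 variant (the model size isn't stated here) and counts only the weights; activations and the key/value cache used during generation need extra room on top, which helps explain why a 16GB card is called for. The helper name is hypothetical.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Approximate memory for the model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Assumption: the 7B Llama 2 variant, rounded to a nominal 7e9 parameters.
    for dtype in ("fp16", "int4"):
        print(f"Llama 2 7B @ {dtype}: ~{weight_memory_gb(7e9, dtype):.1f} GB of weights")
```

The same arithmetic shows why 4-bit weights (roughly a quarter of the FP16 footprint) fit comfortably on 8GB cards.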

In some ways, this could be seen as a direct response to NVIDIA's Chat with RTX. That tool allows owners of GeForce RTX 30- and 40-series GPUs with 8GB or more of VRAM to run TensorRT-LLM models (including Mistral and, indeed, Llama 2) on their graphics cards. NVIDIA achieves lower VRAM usage by distributing INT4-quantized versions of the models, while Intel uses a higher-precision FP16 version; in theory, though, the lower precision shouldn't affect the quality of the responses much.
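To make the precision trade-off concrete, here is a minimal sketch of plain round-to-nearest symmetric INT4 quantization applied to a handful of made-up weights. It is only an illustration: NVIDIA's exact quantization scheme isn't described here, and production schemes typically quantize in small groups with one scale per group. The helper names are hypothetical.

```python
from typing import List, Tuple

INT4_MIN, INT4_MAX = -8, 7  # range of a signed 4-bit integer


def quantize_int4(weights: List[float]) -> Tuple[List[int], float]:
    """Map floats to signed 4-bit codes with one shared scale (symmetric, round-to-nearest)."""
    max_abs = max(abs(w) for w in weights)
    # The largest magnitude maps to 7; fall back to scale 1.0 for an all-zero input.
    scale = max_abs / INT4_MAX if max_abs > 0 else 1.0
    codes = [max(INT4_MIN, min(INT4_MAX, round(w / scale))) for w in weights]
    return codes, scale


def dequantize_int4(codes: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the 4-bit codes."""
    return [c * scale for c in codes]


if __name__ == "__main__":
    weights = [0.12, -0.5, 0.33, 0.07, -0.21, 0.49, -0.02, 0.0]
    codes, scale = quantize_int4(weights)
    restored = dequantize_int4(codes, scale)
    max_err = max(abs(w - r) for w, r in zip(weights, restored))
    print("codes:", codes)
    print(f"scale={scale:.4f}, max round-trip error={max_err:.4f}")
```

Each weight comes back within half a quantization step of its original value, while storage drops from 16 bits to 4 bits per weight; that rounding error is the cost NVIDIA accepts to fit the models on 8GB cards.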

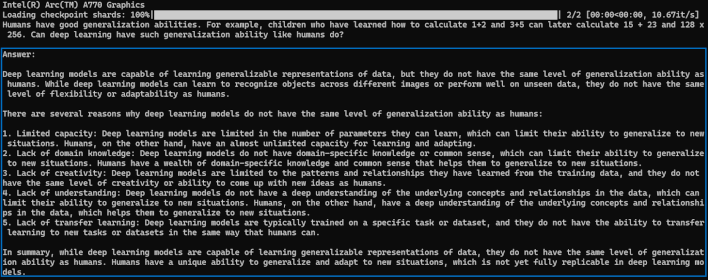

Setting up and running Llama 2 on your A770 is a bit of a process, so we won't reproduce the full steps here. Suffice it to say that it involves installing the Intel oneAPI toolkit, setting up a Conda environment, and, obviously, installing PyTorch as well as the Intel Extension for PyTorch. Once you've got it all set up, there's unfortunately no fancy graphical interface like Chat with RTX's; instead, you send queries to the AI using Python scripts.
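For a sense of what querying the model from a Python script looks like, here is a minimal sketch built on Hugging Face Transformers plus IPEX. Several pieces are assumptions rather than details from Intel's post: the gated `meta-llama/Llama-2-7b-chat-hf` checkpoint (which requires approved access), the use of `ipex.optimize`, and the hypothetical `build_llama2_prompt` helper, which follows Meta's published Llama 2 chat prompt format. Intel's own instructions may differ, so treat this as illustrative only.

```python
from typing import Optional

# Assumption: the 7B chat checkpoint on Hugging Face; the exact variant Intel used may differ.
MODEL_ID = "meta-llama/Llama-2-7b-chat-hf"


def build_llama2_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Hypothetical helper: wrap a message in Llama 2's chat format.

    The tokenizer adds the <s> BOS token itself, so it is omitted here.
    """
    if system_prompt:
        return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message.strip()} [/INST]"
    return f"[INST] {user_message.strip()} [/INST]"


def main() -> None:
    try:
        import torch
        import intel_extension_for_pytorch as ipex  # adds Intel GPU ("xpu") optimizations
        from transformers import AutoModelForCausalLM, AutoTokenizer
    except ImportError as err:
        print(f"Missing dependency ({err}); follow Intel's setup instructions first.")
        return

    if not (hasattr(torch, "xpu") and torch.xpu.is_available()):
        print("No Intel GPU (xpu device) found.")
        return

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # Load FP16 weights, move them to the Arc GPU, then apply IPEX optimizations.
    model = AutoModelForCausalLM.from_pretrained(MODEL_ID, torch_dtype=torch.float16)
    model = ipex.optimize(model.eval().to("xpu"), dtype=torch.float16)

    prompt = build_llama2_prompt("Explain what a GPU does in two sentences.")
    inputs = tokenizer(prompt, return_tensors="pt").to("xpu")
    with torch.inference_mode():
        output = model.generate(**inputs, max_new_tokens=128)

    # Drop the prompt tokens so only the model's reply is printed.
    prompt_len = inputs["input_ids"].shape[1]
    print(tokenizer.decode(output[0][prompt_len:], skip_special_tokens=True))


if __name__ == "__main__":
    main()
```

Keeping the prompt formatting in a plain function makes it easy to swap in different questions or a system prompt without touching the model-loading code.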

It's a little clumsy, but it does work; Intel provides a few different screenshots of the AI's output. Honestly, it's more of a proof of concept than anything, but such proofs matter right now, when many people question the industry's focus on AI while there still seem to be relatively few practical use cases for end users. A locally hosted chatbot is very appealing to people concerned about the privacy and security of their data.