Intel Announces Ivytown Servers For The Enterprise

Introduction to Ivytown

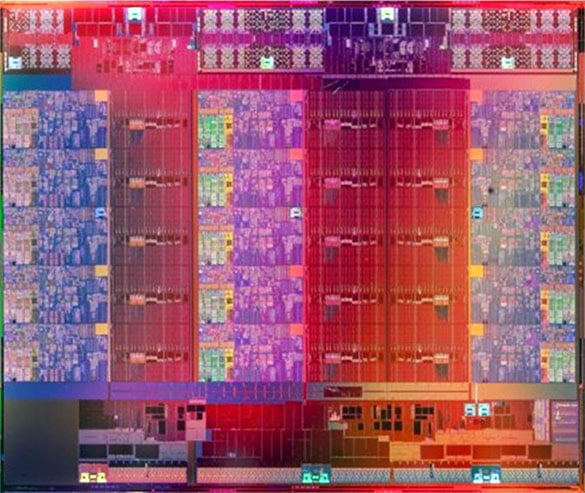

For months, there have been rumors circulating of a new Intel 15-core CPU, with a particular focus on Big Data analytics, multi-socket systems, and the enterprise market. Well, this past January, we took a trip to Intel's SAP research lab to see the new processors and the rather substantial update coming down the pipe.

Unlike Intel's mainstream and basic server products, the truly Big Iron hardware updates on a significantly slower cadence. Haswell chips launched eight months ago for desktop, and the Xeon E5 v2 family, based on Ivy Bridge, has been available for months -- but the Xeon E7 processors Intel is replacing today are still based on the old Westmere core, which first debuted in consumer products back in 2010.

One facet of massive multiprocessor design that doesn't get as much attention as it should is the difficulty of designing chips to extraordinary fault tolerances and with incredibly complex cache structures. Working out how best to distribute bandwidth across the die, so that communication between cores doesn't cause latency spikes or odd performance-cratering corner cases, and then certifying the product to 5-6 nines of reliability, is the reason the EX family lags behind the other server parts.

The new Xeon E7 v2 processors include a suite of new features, as shown below:

The interconnect changes deserve some explanation. Westmere-EX, the old 32nm chip, still had separate I/O hubs on the motherboard, with a QPI port dedicated to each of them. The new Xeon E7 v2 moves those hubs on-die, and the remaining three QPI links get a significant bandwidth boost: up to 8GT/s, from 6.4GT/s. The old Westmere-EX platforms had up to 72 lanes of PCIe 2.0 connectivity provided per socket; the new E7 v2 processors offer 32 PCIe 3.0 lanes per socket.
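To put those interconnect numbers in perspective, here is a rough back-of-the-envelope sketch in Python. The per-lane signalling rates and encoding overheads (8b/10b at 5GT/s for PCIe 2.0, 128b/130b at 8GT/s for PCIe 3.0) are assumptions drawn from the PCIe specifications, not from Intel's announcement, and the function names are purely illustrative.

```python
# Back-of-the-envelope comparison of the interconnect figures quoted above.
# Assumption: per-lane rates and encodings come from the PCIe 2.0 / 3.0
# specifications, not from Intel's announcement.

def pcie_bandwidth_gbs(lanes, gt_per_s, payload_bits, encoded_bits):
    """Approximate one-direction bandwidth in GB/s for a group of PCIe lanes."""
    usable_gbit_per_lane = gt_per_s * payload_bits / encoded_bits
    return lanes * usable_gbit_per_lane / 8  # convert bits to bytes


def qpi_speedup(old_gt_per_s, new_gt_per_s):
    """Relative increase in QPI transfer rate (0.25 means 25% faster)."""
    return new_gt_per_s / old_gt_per_s - 1


# Lane counts as quoted in the article.
westmere_ex_pcie = pcie_bandwidth_gbs(72, 5.0, 8, 10)   # PCIe 2.0, 8b/10b
e7_v2_pcie = pcie_bandwidth_gbs(32, 8.0, 128, 130)      # PCIe 3.0, 128b/130b

print(f"Westmere-EX PCIe 2.0: {westmere_ex_pcie:.1f} GB/s per direction")
print(f"E7 v2 PCIe 3.0:       {e7_v2_pcie:.1f} GB/s per direction")
print(f"QPI transfer rate:    {qpi_speedup(6.4, 8.0):.0%} higher")
```

Under these assumptions, each PCIe 3.0 lane carries nearly twice what a PCIe 2.0 lane does, so the smaller lane count works out to roughly 31.5GB/s per direction against about 36GB/s for the older part's quoted lane count.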

The entire structure of the last-level cache has been reworked, with a comprehensive ring bus incorporated across all 15 cores. Intel implemented the 37.5MB of L3 in 15 slices, which allows each core a dedicated interface to the L3. Intel claims up to 450GB/s of bandwidth per socket, counting both the quad-channel memory controllers and the L3 cache. Total access latency is just 15.5ns -- faster than AMD's L2 cache on modern processors. Thanks to a new buffered memory protocol, dubbed Jordan Creek, Intel can now support three DIMMs per memory channel and memory speeds of up to DDR3-1600, as opposed to Westmere's relatively paltry DDR3-1066.
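The cache and memory figures above reduce to some simple arithmetic, sketched here in Python. The 64-bit (8-byte) DDR3 channel width is an assumption taken from the JEDEC DDR3 standard rather than from Intel's material, the helper names are hypothetical, and the calculation ignores whatever the buffering layer adds or costs.

```python
# Rough arithmetic on the cache and memory figures quoted above.
# Assumption: one DDR3 channel is 64 bits (8 bytes) wide, per the JEDEC
# DDR3 standard. Helper names are illustrative only.

L3_TOTAL_MB = 37.5   # total L3 quoted in the article
L3_SLICES = 15       # one slice per core


def l3_slice_mb(total_mb, slices):
    """Size of each L3 slice if the cache is divided evenly."""
    return total_mb / slices


def ddr3_channel_gbs(mt_per_s, bus_bytes=8):
    """Theoretical peak bandwidth of a single DDR3 channel in GB/s."""
    return mt_per_s * bus_bytes / 1000


slice_mb = l3_slice_mb(L3_TOTAL_MB, L3_SLICES)
old_channel = ddr3_channel_gbs(1066)   # nominal DDR3-1066
new_channel = ddr3_channel_gbs(1600)   # nominal DDR3-1600

print(f"L3 per slice:      {slice_mb} MB")
print(f"DDR3-1066 channel: {old_channel:.2f} GB/s peak")
print(f"DDR3-1600 channel: {new_channel:.2f} GB/s peak")
print(f"Per-channel gain:  {new_channel / old_channel - 1:.0%}")
```

An even split gives each core a 2.5MB slice, and the move from DDR3-1066 to DDR3-1600 raises the theoretical peak of each channel by about half, before any real-world overheads.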
